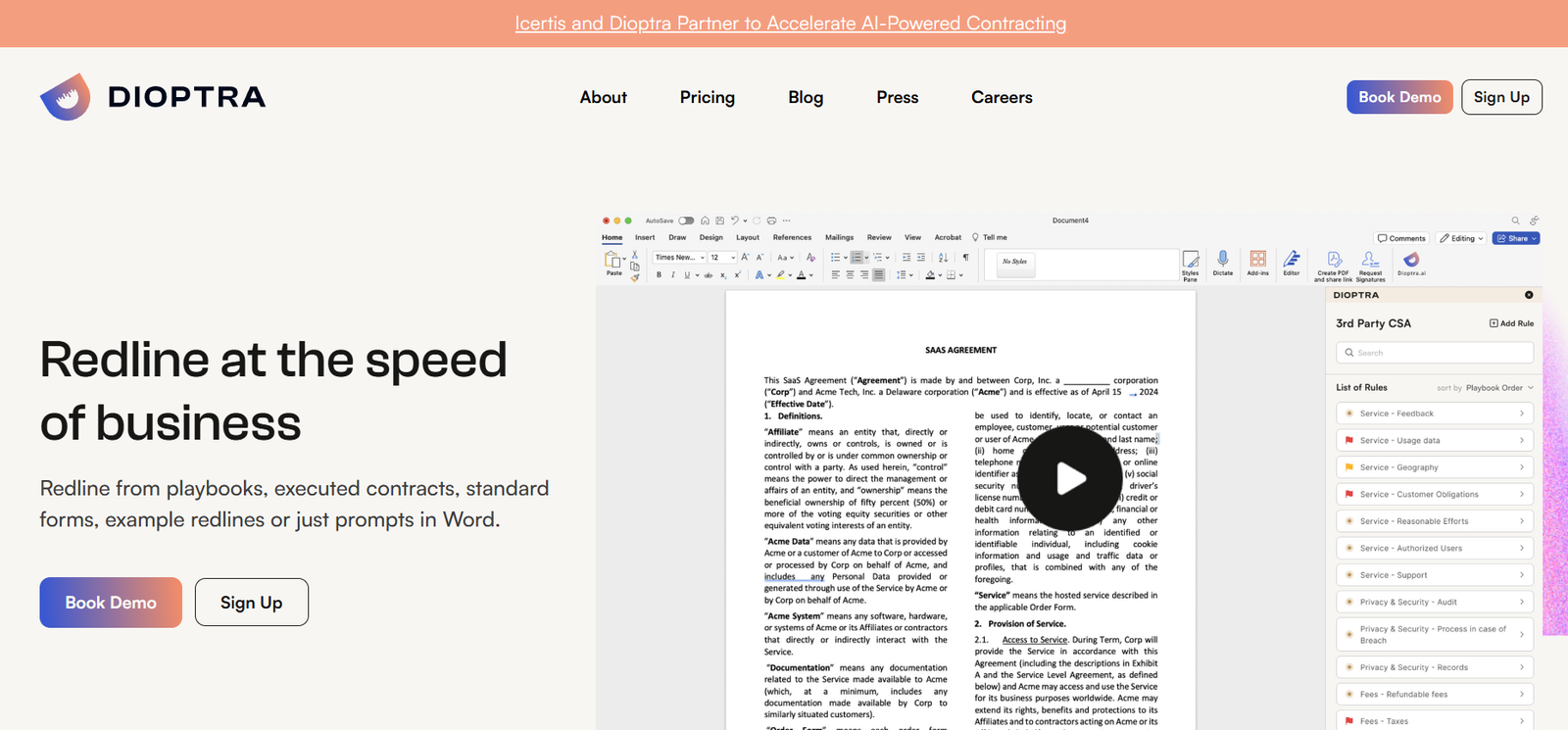

Dioptra AI is a platform that helps developers, researchers, and organizations evaluate and monitor the performance of large language models (LLMs) and generative AI systems. As LLMs spread across industries, teams need reliable ways to check the quality, accuracy, and safety of these models.

Dioptra AI offers a comprehensive solution for testing LLM outputs, benchmarking performance across different models, identifying edge cases, and integrating continuous evaluation into the AI development lifecycle. By delivering detailed insights into model behavior, Dioptra AI empowers teams to build more trustworthy, explainable, and efficient AI systems.

Whether you’re building an AI-powered product, deploying models in production, or conducting research, Dioptra AI provides the tools you need to validate and optimize your AI models with confidence.

Description

Dioptra AI is a purpose-built evaluation platform for LLMs and generative AI tools. It allows teams to assess model responses against defined metrics such as accuracy, relevance, safety, and consistency. The platform supports automated testing, side-by-side model comparison, and prompt engineering—all within an intuitive interface that requires minimal setup.

Built by AI practitioners for AI practitioners, Dioptra is designed to support rigorous evaluation standards in the fast-moving world of machine learning. It bridges the gap between model development and responsible deployment by making LLM evaluation a first-class citizen in the AI stack.

Dioptra AI is cloud-based, collaborative, and model-agnostic—allowing users to evaluate open-source models, proprietary LLMs, and APIs from major providers like OpenAI, Anthropic, Cohere, or Mistral.

Features

Dioptra AI includes a robust set of features tailored to AI development and evaluation workflows:

Model Evaluation Suite: Run structured evaluations of LLM outputs based on custom test cases, scoring rubrics, or AI-based grading.

Side-by-Side Comparison: Compare outputs from multiple models in a unified interface to determine which performs best on specific tasks.

Prompt Testing and Optimization: Iterate on prompts and view response variations across different models to refine instructions and improve outcomes.

Automated Grading and Feedback: Use AI-assisted grading to assess answers based on pre-defined benchmarks or rubric-based scoring.

Dataset Upload and Management: Import datasets for evaluation, including prompts, expected outputs, and test metadata.

Collaborative Review: Invite team members to participate in evaluation, leave feedback, and co-grade responses.

Continuous Monitoring: Set up ongoing tests to detect regressions or monitor model behavior over time as you deploy new versions.

Analytics Dashboard: Visualize model performance, error rates, and improvement trends with interactive charts and metrics.

Multi-Model Support: Evaluate and compare results from different LLMs and APIs, including OpenAI's GPT-4, Anthropic's Claude, Meta's Llama, Cohere's models, and custom models.

Secure Cloud Environment: Dioptra runs on secure cloud infrastructure with data privacy and user access controls.

API Access: Integrate Dioptra into your CI/CD or model deployment pipelines for automated testing and monitoring.
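
To make the side-by-side comparison and rubric-based scoring ideas above more concrete, here is a minimal Python sketch. It does not use Dioptra's API, which is not documented in this article. The names `keyword_score`, `compare_models`, `model_a`, and `model_b` are hypothetical, the "models" are stub functions standing in for real LLM clients, and the keyword rubric is only one simple example of a scoring rule.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a real model client: maps a prompt to a response.
ModelFn = Callable[[str], str]


def keyword_score(response: str, required: List[str]) -> float:
    """Fraction of required keywords found in the response (case-insensitive)."""
    if not required:
        return 0.0
    text = response.lower()
    hits = sum(1 for kw in required if kw.lower() in text)
    return hits / len(required)


def compare_models(models: Dict[str, ModelFn], cases: List[dict]) -> Dict[str, float]:
    """Return each model's mean rubric score over the same set of test cases."""
    if not cases:
        raise ValueError("at least one test case is required")
    totals = {name: 0.0 for name in models}
    for case in cases:
        for name, fn in models.items():
            totals[name] += keyword_score(fn(case["prompt"]), case["keywords"])
    return {name: total / len(cases) for name, total in totals.items()}


# Stub models with canned answers (assumptions for illustration only).
def model_a(prompt: str) -> str:
    return "Refunds are issued within 14 days of purchase."


def model_b(prompt: str) -> str:
    return "Please contact support."


cases = [{"prompt": "What is the refund policy?", "keywords": ["refund", "14 days"]}]
scores = compare_models({"model_a": model_a, "model_b": model_b}, cases)
best = max(scores, key=scores.get)
print(scores, best)
```

In a real setup, the stub functions would be replaced by calls to the models under test, and the rubric would likely be richer than keyword matching (for example, human review or AI-based grading, as the feature list describes).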

How It Works

Dioptra AI simplifies the process of testing and validating large language models through a streamlined workflow:

Step 1 – Create a Project: Users begin by creating a project that defines the purpose and scope of evaluation (e.g., customer support chatbot, coding assistant, summarization model).

Step 2 – Upload Test Cases: Users upload prompt-response pairs or test datasets. Dioptra supports CSV, JSON, and API-based data input.

Step 3 – Choose Models: Select one or more models to evaluate—whether APIs like GPT-4 or Claude, or self-hosted open-source models.

Step 4 – Define Evaluation Metrics: Choose from built-in metrics like correctness, coherence, safety, and helpfulness, or define custom rubrics.

Step 5 – Run Evaluations: Dioptra executes the evaluation process, collects outputs, and scores them using human review, AI grading, or both.

Step 6 – Analyze Results: View performance metrics in the dashboard, compare models side-by-side, and identify areas for improvement.

Step 7 – Monitor and Iterate: As models or prompts are updated, re-run evaluations to track changes and avoid regressions.

Dioptra’s interface is designed for fast iteration, making it ideal for teams practicing agile AI development.
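
The steps above can be sketched in a few lines of Python. This is an illustration of the general workflow (load test cases, run a model, score the outputs, summarize), not Dioptra's actual implementation or API. The CSV column names `prompt` and `expected`, the functions `load_cases`, `stub_model`, `exact_match`, and `run_evaluation`, and the sample data are all assumptions made for the example.

```python
import csv
import io
from statistics import mean

# Illustrative test cases in CSV form; the column names are an assumption.
CSV_DATA = """prompt,expected
What is 2 + 2?,4
What is the capital of France?,Paris
"""


def load_cases(csv_text):
    """Parse CSV text into a list of {'prompt': ..., 'expected': ...} dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def stub_model(prompt):
    """Stand-in for a real LLM call; returns canned answers, one of them wrong."""
    answers = {"What is 2 + 2?": "4", "What is the capital of France?": "Lyon"}
    return answers.get(prompt, "")


def exact_match(output, expected):
    """Score 1.0 when output equals expected, ignoring case and outer whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_evaluation(cases, model, metric):
    """Run the model on every case and attach a metric score to each result."""
    results = []
    for case in cases:
        output = model(case["prompt"])
        results.append({
            "prompt": case["prompt"],
            "output": output,
            "score": metric(output, case["expected"]),
        })
    return results


results = run_evaluation(load_cases(CSV_DATA), stub_model, exact_match)
accuracy = mean(r["score"] for r in results)
failures = [r["prompt"] for r in results if r["score"] == 0.0]
print(f"accuracy={accuracy:.2f}, failures={failures}")
```

Passing the model and the metric in as plain functions keeps the loop model-agnostic: swapping in a different model or a custom rubric does not require changing `run_evaluation`.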

Use Cases

Dioptra AI is designed for any organization building or deploying language models, including startups, enterprises, research labs, and AI product teams. Key use cases include:

Model Benchmarking: Compare commercial and open-source LLMs for a specific use case to select the best model for deployment.

Prompt Engineering: Refine prompts and instructions by testing them across different models and comparing which prompt and model combinations perform best.

Regression Testing: Automatically test new model versions against a baseline to ensure quality is maintained or improved.

Quality Assurance for AI Products: Evaluate how LLMs behave in specific product contexts such as chatbots, summarizers, or code generators.

AI Safety and Risk Assessment: Test for unsafe or biased outputs and document results for compliance and risk mitigation.

Academic Research: Use Dioptra to evaluate model responses across tasks such as reasoning, knowledge retrieval, or translation.

Enterprise AI Teams: Ensure that internal AI tools deliver reliable, consistent outputs before full-scale deployment.

LLMOps and MLOps: Integrate Dioptra into the model lifecycle for continuous testing and performance tracking.
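
The regression-testing use case in the list above can be pictured as a simple gate that compares a new model version's scores with a baseline. The sketch below is a generic illustration under stated assumptions, not Dioptra's API: `find_regressions` is a hypothetical helper, the metric names are borrowed from the built-in metrics mentioned earlier, and the scores and the 0.02 tolerance are made-up values.

```python
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.02,
) -> List[str]:
    """Return metrics where the candidate is missing or trails the baseline by more than tolerance."""
    regressions = []
    for metric, base_score in baseline.items():
        new_score = candidate.get(metric)
        if new_score is None or base_score - new_score > tolerance:
            regressions.append(metric)
    return sorted(regressions)


# Made-up scores for illustration only.
baseline = {"correctness": 0.91, "safety": 0.99, "helpfulness": 0.84}
candidate = {"correctness": 0.93, "safety": 0.95, "helpfulness": 0.83}

regressed = find_regressions(baseline, candidate)
print(regressed)
```

In a CI/CD pipeline, a non-empty result would typically fail the build (for example, by exiting with a non-zero status) so that the regression is caught before deployment.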

Pricing

Dioptra AI currently offers early access pricing. Detailed plans are typically customized based on team size, usage volume, and enterprise needs.

Pricing tiers generally include:

Free Trial:

Limited number of evaluation runs

Access to core features for individual users

Limited model comparisons

Ideal for personal use or experimentation

Team Plan (Contact for pricing):

Unlimited projects and evaluations

Collaboration features

API access

Priority support

Ideal for startups and small teams

Enterprise Plan (Custom pricing):

Full platform access

Dedicated cloud instance

Advanced security and compliance features

Custom SLAs and support agreements

Integration with enterprise systems

For the most accurate pricing for your organization, Dioptra encourages users to request a demo or contact the team at https://www.dioptra.ai.

Strengths

Focused on LLM Evaluation: Dioptra fills a critical gap in AI development by offering a specialized platform for structured model testing.

Model-Agnostic: Works with any language model—open-source, proprietary, or commercial—without locking users into a single ecosystem.

Collaborative Workflows: Supports teams through shared workspaces, grading, feedback, and version control.

Real-Time Comparison: See differences in model behavior instantly with side-by-side evaluations.

Customizable Metrics: Users can define their own scoring rubrics and tailor evaluations to specific business needs.

Continuous Monitoring: Ideal for regression testing and lifecycle management in production AI systems.

Built for AI Developers: Designed by practitioners for hands-on use during model development, testing, and deployment.

Security Focused: Data privacy controls and secure infrastructure help protect sensitive evaluation data.

Drawbacks

Limited Public Pricing: Pricing is not published transparently on the website, so evaluating the tool or budgeting for it may require contacting the team first.

Niche Audience: Primarily useful for teams already building with or deploying LLMs—not a general-purpose AI tool.

Requires Test Dataset Setup: Users must prepare structured test cases or prompts in advance, which may require upfront effort.

Still Evolving: As a relatively new tool, some enterprise features may still be in development or limited to select users.

Comparison with Other Tools

Dioptra AI competes with a growing number of tools in the LLMOps and AI evaluation space. Key comparisons include:

TruEra: Focuses on explainability and performance monitoring across AI models but is less specialized in LLM-specific prompt testing.

Humanloop: Offers prompt optimization and A/B testing. Dioptra provides deeper evaluation workflows and scoring customization.

PromptLayer: Tracks prompt usage and performance in production. Dioptra focuses more on structured evaluation and pre-deployment testing.

LangSmith (from LangChain): Built for prompt debugging and tracing, and tightly integrated with the LangChain ecosystem. Dioptra is more model-agnostic and evaluation-focused.

Helicone: Focuses on LLM monitoring and analytics. Dioptra goes further into structured testing and comparison workflows.

Dioptra’s strength lies in its dedicated focus on structured, repeatable LLM evaluation workflows, which makes it a strong candidate for teams that want systematic testing in their AI development pipeline.

Customer Reviews and Testimonials

While Dioptra AI is still expanding its user base, early feedback from AI developers and researchers has been positive:

“Dioptra helps us test LLMs like we test software—systematically and at scale.”

“Being able to compare Claude and GPT-4 side by side on our own data is a game changer.”

“We’ve integrated Dioptra into our release process to catch regressions before deployment.”

As more teams adopt LLMs in production, the demand for platforms like Dioptra is expected to grow rapidly, and formal reviews are likely to follow on platforms like G2 and Product Hunt.

Conclusion

Dioptra AI is a specialized platform designed to meet the growing need for structured evaluation and monitoring of large language models. With support for model comparisons, prompt testing, AI grading, and continuous monitoring, Dioptra helps AI teams build better, safer, and more effective models—faster.

As AI adoption expands across industries, tools like Dioptra will be essential for maintaining quality, transparency, and accountability in AI-powered systems.