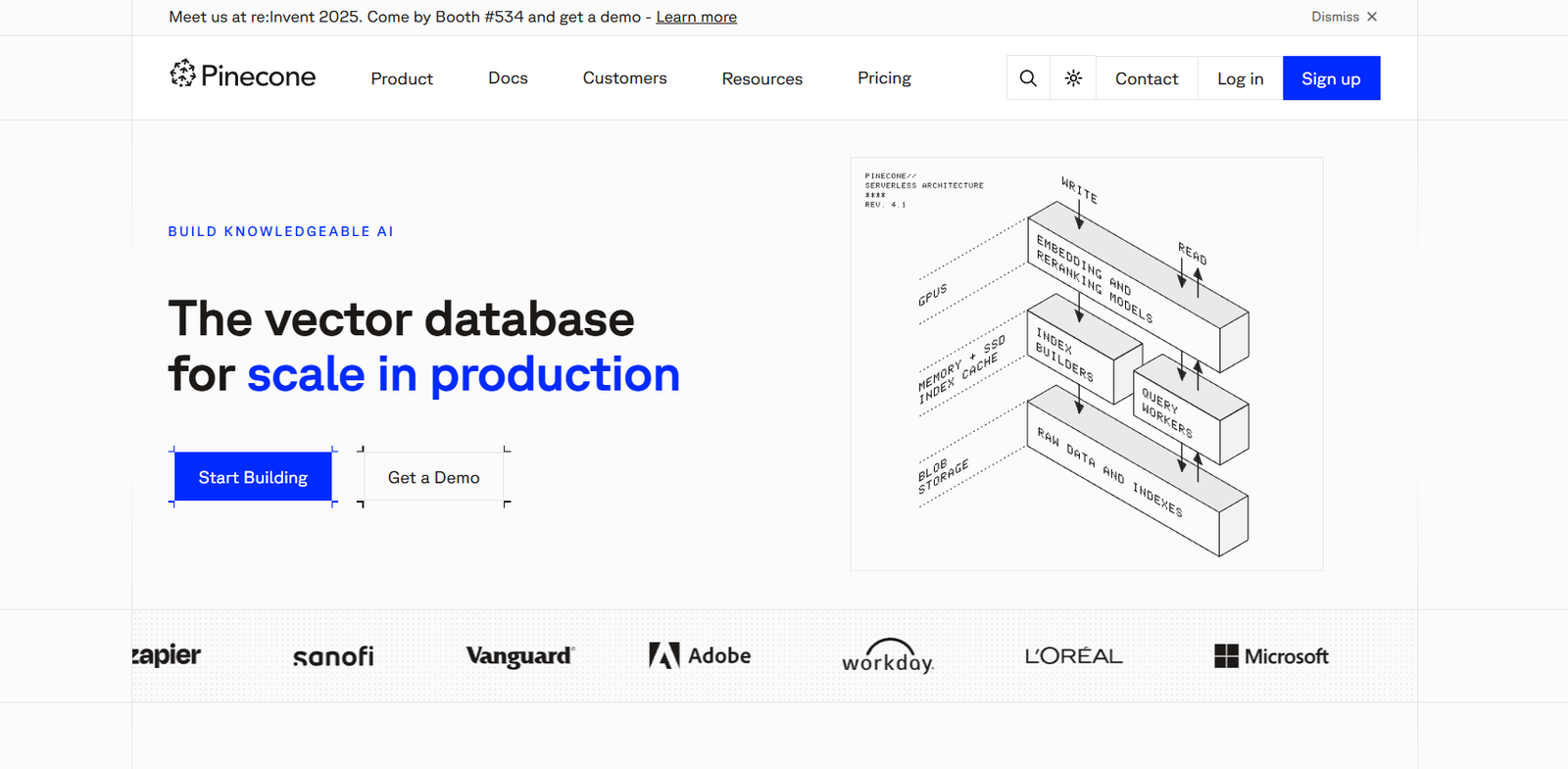

Pinecone is a fully managed vector database designed for building high-performance, scalable applications with similarity search capabilities. It allows developers to store, index, and query vector embeddings—numerical representations of unstructured data such as text, images, and audio—at scale. Pinecone eliminates the complexity of managing vector infrastructure by offering real-time, production-ready vector search through a simple API.

Built specifically for AI and machine learning workloads, Pinecone is ideal for powering features like semantic search, recommendation engines, personalization, anomaly detection, and more.

Features

Pinecone’s main features include:

Fully Managed Infrastructure: No setup or maintenance required—Pinecone handles scaling, replication, and uptime.

High-Performance Vector Search: Perform similarity search on millions to billions of vectors with sub-second latency.

Real-Time Updates: Insert (upsert), update, or delete vectors at any time. Pinecone is eventually consistent, so changes typically become visible to queries after a short delay rather than instantly.

Hybrid Search: Combine dense (semantic) and sparse (keyword) vectors in one query to balance meaning with exact-term matches; results can also be narrowed with metadata filters.

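Namespace Partitioning: Split the records in an index into namespaces. Each write or query targets one namespace, which suits multitenant applications (for example, one namespace per customer) or separating environments.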

Scalability: Serverless indexes scale automatically with data volume and query load; pod-based indexes scale by adding pods and replicas.

Continuous Indexing: Records are indexed as they are written, so there is no separate re-indexing step or downtime.

Secure and Compliant: Encryption in transit and at rest, SOC 2 compliance, and role-based access control.

Region Selection: Create each index in a chosen cloud provider and region, close to your users or application servers, to reduce latency.

Embedding Integrations: Works with popular ML frameworks and embedding providers such as OpenAI, Cohere, and Hugging Face.

Pinecone focuses entirely on vector search, offering specialized performance and reliability for this specific use case.
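Hybrid search pairs each record’s dense (semantic) vector with a sparse (keyword-style) vector. One weighting scheme, which as we understand it is the one Pinecone’s hybrid-search guide illustrates, scales the dense part by a weight `alpha` and the sparse part by `1 - alpha` before comparing with a dot product. The sketch below reproduces that idea locally in plain Python. It does not call Pinecone; the function names, the dict-based sparse format, and the sample records are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical sparse format: {dimension index: weight}.
# (Pinecone's own API represents sparse vectors as separate "indices" and "values" lists.)
SparseVector = Dict[int, float]


def dense_dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of two dense vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("dense vectors must have the same dimension")
    return sum(x * y for x, y in zip(a, b))


def sparse_dot(a: SparseVector, b: SparseVector) -> float:
    """Dot product of two sparse vectors; only shared indices contribute."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller vector
    return sum(w * b[i] for i, w in a.items() if i in b)


def hybrid_score(q_dense: Sequence[float], q_sparse: SparseVector,
                 r_dense: Sequence[float], r_sparse: SparseVector,
                 alpha: float) -> float:
    """Convex combination: alpha=1 is purely semantic, alpha=0 purely keyword-based."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * dense_dot(q_dense, r_dense) + (1 - alpha) * sparse_dot(q_sparse, r_sparse)


def rank(q_dense: Sequence[float], q_sparse: SparseVector,
         records: Dict[str, Tuple[List[float], SparseVector]],
         alpha: float, top_k: int = 3) -> List[Tuple[str, float]]:
    """Return the top_k (id, score) pairs, highest score first."""
    scored = [(rid, hybrid_score(q_dense, q_sparse, d, s, alpha))
              for rid, (d, s) in records.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    records = {
        "a": ([1.0, 0.0], {3: 1.0}),  # semantically close to the query
        "b": ([0.0, 1.0], {7: 2.0}),  # shares a strongly weighted keyword with the query
    }
    query_dense, query_sparse = [0.9, 0.1], {7: 1.0}
    print(rank(query_dense, query_sparse, records, alpha=1.0)[0][0])  # a
    print(rank(query_dense, query_sparse, records, alpha=0.0)[0][0])  # b
```

Moving `alpha` toward 1 favors semantic similarity and moving it toward 0 favors keyword overlap, so the same query can rank records differently depending on the weight.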

How It Works

Pinecone indexes vector embeddings, usually produced by a dedicated embedding model, and serves similarity search over them through an API. A typical workflow looks like this:

Generate Embeddings: Use an embedding model (for example, one from OpenAI or Cohere, or an open-source Sentence Transformers model) to convert unstructured data into vectors.

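Create an Index and Insert Vectors: Create an index whose dimension matches the embedding model’s output and choose a similarity metric (cosine, Euclidean, or dot product). Then upsert the vectors via the API, each with a unique ID and optional metadata.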

Query the Index: Embed the query with the same model, then run an approximate nearest-neighbor search to retrieve the top-k most similar vectors, optionally filtered by metadata.

Get Results: Pinecone returns the closest vectors (and associated metadata), enabling semantic search or recommendations.

Pinecone handles the indexing, optimization, and querying behind the scenes, ensuring consistent performance and uptime.
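To make the create, upsert, and query cycle concrete without a Pinecone account, here is a small in-memory stand-in in plain Python. It is not Pinecone’s SDK: the class `ToyIndex`, its method signatures, the `metadata_filter` parameter, and the sample data are hypothetical, and it uses exact brute-force cosine search where Pinecone uses approximate nearest-neighbor indexes. It does mirror a few behaviors of the real service: a fixed dimension per index, upserts that overwrite an existing ID, per-namespace partitions, and metadata filters written with operators such as `$eq` and `$in`. In Pinecone’s Python client the corresponding operations are `index.upsert(...)` and `index.query(...)`.

```python
import math
from typing import Any, Callable, Dict, List, Optional, Sequence, Tuple

Metadata = Dict[str, Any]
Filter = Dict[str, Dict[str, Any]]  # e.g. {"lang": {"$eq": "en"}}

# A small subset of Pinecone-style filter operators, implemented locally.
OPS: Dict[str, Callable[[Any, Any], bool]] = {
    "$eq": lambda value, target: value == target,
    "$ne": lambda value, target: value != target,
    "$in": lambda value, target: value in target,
    "$gte": lambda value, target: value >= target,
    "$lte": lambda value, target: value <= target,
}


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def matches(meta: Metadata, metadata_filter: Optional[Filter]) -> bool:
    """True if every condition holds; records missing a filtered field never match (a simplification)."""
    for field, conditions in (metadata_filter or {}).items():
        if field not in meta:
            return False
        for op, target in conditions.items():
            if op not in OPS:
                raise ValueError(f"unsupported operator: {op}")
            if not OPS[op](meta[field], target):
                return False
    return True


class ToyIndex:
    """Hypothetical in-memory index with exact brute-force search (not Pinecone's SDK)."""

    def __init__(self, dimension: int) -> None:
        self.dimension = dimension
        # namespace -> record id -> (vector, metadata)
        self._namespaces: Dict[str, Dict[str, Tuple[List[float], Metadata]]] = {}

    def upsert(self, records: List[Tuple[str, List[float], Metadata]], namespace: str = "") -> None:
        ns = self._namespaces.setdefault(namespace, {})
        for record_id, values, meta in records:
            if len(values) != self.dimension:
                raise ValueError(f"{record_id}: expected {self.dimension} dims, got {len(values)}")
            ns[record_id] = (list(values), dict(meta))  # inserts, or overwrites an existing ID

    def delete(self, ids: List[str], namespace: str = "") -> None:
        ns = self._namespaces.get(namespace, {})
        for record_id in ids:
            ns.pop(record_id, None)

    def query(self, vector: List[float], top_k: int = 3, namespace: str = "",
              metadata_filter: Optional[Filter] = None) -> List[Tuple[str, float]]:
        """Return up to top_k (id, cosine score) pairs from one namespace, best first."""
        if len(vector) != self.dimension:
            raise ValueError("query dimension does not match the index dimension")
        hits = [(record_id, cosine(vector, values))
                for record_id, (values, meta) in self._namespaces.get(namespace, {}).items()
                if matches(meta, metadata_filter)]
        hits.sort(key=lambda hit: hit[1], reverse=True)
        return hits[:top_k]


if __name__ == "__main__":
    index = ToyIndex(dimension=3)
    index.upsert([
        ("doc1", [1.0, 0.0, 0.0], {"lang": "en", "year": 2023}),
        ("doc2", [0.9, 0.1, 0.0], {"lang": "de", "year": 2024}),
        ("doc3", [0.0, 1.0, 0.0], {"lang": "en", "year": 2024}),
    ], namespace="tenant-a")
    query = [1.0, 0.0, 0.0]  # stands in for the embedded user query
    print([rid for rid, _ in index.query(query, top_k=2, namespace="tenant-a")])  # ['doc1', 'doc2']
    print([rid for rid, _ in index.query(query, top_k=2, namespace="tenant-a",
                                         metadata_filter={"lang": {"$eq": "en"}})])  # ['doc1', 'doc3']
```

The filter changes which records are eligible, not how they are scored: `doc2` is the second-closest vector overall but is excluded once the query is restricted to English documents.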

Use Cases

Pinecone is purpose-built for AI-first applications that rely on semantic understanding and similarity search:

Semantic Search: Power AI-driven search engines that understand context and meaning instead of keyword matching.

AI Chatbots and RAG: Store embeddings of documents or knowledge bases to enable retrieval-augmented generation (RAG) with LLMs.

Recommendation Systems: Deliver personalized content or product suggestions based on user similarity.

Fraud and Anomaly Detection: Flag records whose embeddings are unusually far from their nearest neighbors or from examples of normal behavior.

Image and Audio Search: Perform similarity search across multimedia content using embeddings.

E-commerce Search: Improve product discovery by matching user intent with relevant listings.

Content Deduplication: Identify and remove duplicate records based on semantic similarity.

Pinecone is used in industries such as technology, finance, e-commerce, and healthcare.
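Content deduplication and anomaly detection both reduce to asking how similar a vector is to its nearest neighbor. The sketch below shows that logic locally with brute-force cosine similarity; in a deployment, the nearest-neighbor lookup would instead be a query against the index. The function names, the default thresholds (0.95 for duplicates, 0.5 for anomalies), and the toy vectors are hypothetical, and sensible thresholds depend on the embedding model and data.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_match(vec: Sequence[float], pool: Dict[str, Sequence[float]]) -> float:
    """Highest cosine similarity between vec and any vector in pool (0.0 if pool is empty)."""
    return max((cosine(vec, other) for other in pool.values()), default=0.0)


def deduplicate(items: Dict[str, Sequence[float]], threshold: float = 0.95) -> List[str]:
    """Greedy pass in insertion order: keep an item unless it nearly duplicates one already kept."""
    kept: Dict[str, Sequence[float]] = {}
    for item_id, vec in items.items():
        if best_match(vec, kept) < threshold:
            kept[item_id] = vec
    return list(kept)


def anomalies(candidates: Dict[str, Sequence[float]],
              normal: Dict[str, Sequence[float]],
              threshold: float = 0.5) -> List[str]:
    """Flag candidates whose closest 'normal' example is still dissimilar."""
    return [cid for cid, vec in candidates.items() if best_match(vec, normal) < threshold]


if __name__ == "__main__":
    docs = {
        "d1": [1.0, 0.0, 0.0],
        "d2": [0.99, 0.01, 0.0],  # near-duplicate of d1
        "d3": [0.0, 1.0, 0.0],
    }
    print(deduplicate(docs))                         # ['d1', 'd3']
    print(anomalies({"e1": [0.0, 0.0, 1.0]}, docs))  # ['e1']
```

The greedy pass keeps the first copy it sees, so the order in which items arrive decides which member of a duplicate group survives.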

Pricing

Pinecone uses a usage-based pricing model with several service tiers. Plan names, limits, and billing units change over time, so the tier summary below is a snapshot; check the pricing page for current figures:

Starter (Free):

1 project, 1 pod, 1 namespace

1 million vector capacity

Best for testing and prototyping

Standard:

Pay-as-you-go billing based on resource usage

Includes features like metadata filtering and higher query throughput

Suitable for growing applications

Enterprise:

Custom pricing

Includes SLAs, dedicated support, VPC deployment, and compliance features

Designed for mission-critical and large-scale deployments

Costs depend on how much data you store (vector count, vector dimension, and metadata) and how much compute you use: pods for pod-based indexes, or read and write units for serverless indexes. Full pricing information is available at https://www.pinecone.io/pricing.
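Because storage grows with both the number of vectors and their dimension, a quick back-of-the-envelope estimate helps when comparing plans or embedding models. This sketch assumes each dimension is stored as a 32-bit float (4 bytes) and models IDs and metadata as a single hypothetical fixed overhead per record. It estimates raw data size only; Pinecone’s billed storage may include index structures and follows its own rules.

```python
BYTES_PER_FLOAT32 = 4


def estimate_raw_bytes(num_vectors: int, dimension: int, metadata_bytes_per_record: int = 0) -> int:
    """Raw size: float32 vector values plus an assumed fixed per-record overhead."""
    if num_vectors < 0 or dimension <= 0 or metadata_bytes_per_record < 0:
        raise ValueError("counts must be non-negative and dimension positive")
    return num_vectors * (dimension * BYTES_PER_FLOAT32 + metadata_bytes_per_record)


def to_gb(num_bytes: int) -> float:
    """Decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_bytes / 10**9


if __name__ == "__main__":
    # One million vectors from a 1536-dimension model versus a 384-dimension model.
    print(to_gb(estimate_raw_bytes(1_000_000, 1536)))  # 6.144
    print(to_gb(estimate_raw_bytes(1_000_000, 384)))   # 1.536
```

Quartering the embedding dimension quarters the raw vector storage, which is why the choice of embedding model has a direct effect on cost.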

Strengths

Pinecone’s specialized focus on vector databases offers numerous advantages:

Purpose-Built for Vector Search: Optimized specifically for high-speed, scalable similarity queries.

Fully Managed: No DevOps burden—just plug in and start building.

Fast and Scalable: Maintains low-latency performance at scale, even with billions of vectors.

Rich Filtering: Combines semantic and structured filtering for better results.

Production-Ready: Enterprise-grade availability, uptime, and reliability.

Developer-Friendly: Well-documented APIs and SDKs make integration simple.

Together, these strengths make Pinecone a strong choice for production vector search.

Drawbacks

Pinecone also has trade-offs to consider:

Focused on One Use Case: It’s not a general-purpose database; it complements but doesn’t replace traditional data stores.

Limited Offline Access: As a fully managed cloud solution, it requires internet access and cannot be self-hosted.

Pricing at Scale: While efficient, costs can rise with high-volume or enterprise-scale workloads.

Still, for teams building AI-powered applications, Pinecone’s trade-offs are justified by performance and ease of use.

Comparison with Other Tools

Pinecone is often compared with other vector databases and retrieval solutions:

vs. FAISS: FAISS is a powerful open-source similarity-search library from Meta, not a database: you must build the surrounding service, persistence, replication, and scaling yourself. Pinecone is managed and scalable out of the box.

vs. Weaviate: Weaviate is open source and offers broader built-in features, such as vectorizer modules, a GraphQL API, and cross-references for knowledge-graph-style data. Pinecone is leaner and focused on vector search at scale.

vs. Milvus: Milvus is a robust, flexible open-source vector database, but self-hosting it means running and scaling its infrastructure yourself (Zilliz Cloud offers a managed version). Pinecone emphasizes simplicity and cloud-native operation.

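vs. Elasticsearch/OpenSearch: Both support k-nearest-neighbor vector search (Elasticsearch natively on dense_vector fields, OpenSearch through its k-NN plugin), but they are general-purpose search engines rather than purpose-built vector databases, so large vector workloads can take more tuning and capacity planning.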

Pinecone is best for teams seeking a high-performance, no-maintenance vector search service.

Customer Reviews and Testimonials

Pinecone is widely praised by developers and ML engineers for its performance and simplicity. Common feedback includes:

“Incredibly easy to get started with.”

“Handles millions of vectors without a sweat.”

“Great support and documentation.”

“Crucial for our RAG pipeline with LLMs.”

It has become a go-to solution for companies building with large language models and other AI systems, and is used by startups and enterprises alike.

Conclusion

Pinecone is a production-grade, fully managed vector database built for developers who need scalable, low-latency similarity search. Whether you’re building semantic search, recommendation engines, or retrieval-augmented generation applications, Pinecone provides the infrastructure to store and query high-dimensional vectors reliably. With strong performance, easy integration, and support for real-time AI use cases, Pinecone stands out as a leading solution in the evolving world of vector-native applications.