PAIR (People + AI Research) is a Google initiative focused on advancing the field of human-centered artificial intelligence. It explores how people can better understand, use, and interact with AI systems. The PAIR team develops open-source tools, research frameworks, and design practices aimed at improving AI interpretability, transparency, fairness, and usability.

Through collaboration between engineers, researchers, and UX designers, PAIR creates solutions that help both developers and end-users make sense of complex AI models, ultimately bridging the gap between humans and intelligent systems.

Features

PAIR encompasses a variety of tools and research artifacts, with a strong focus on interpretability and fairness in AI. Some of the key open-source tools developed under PAIR include:

What-If Tool:

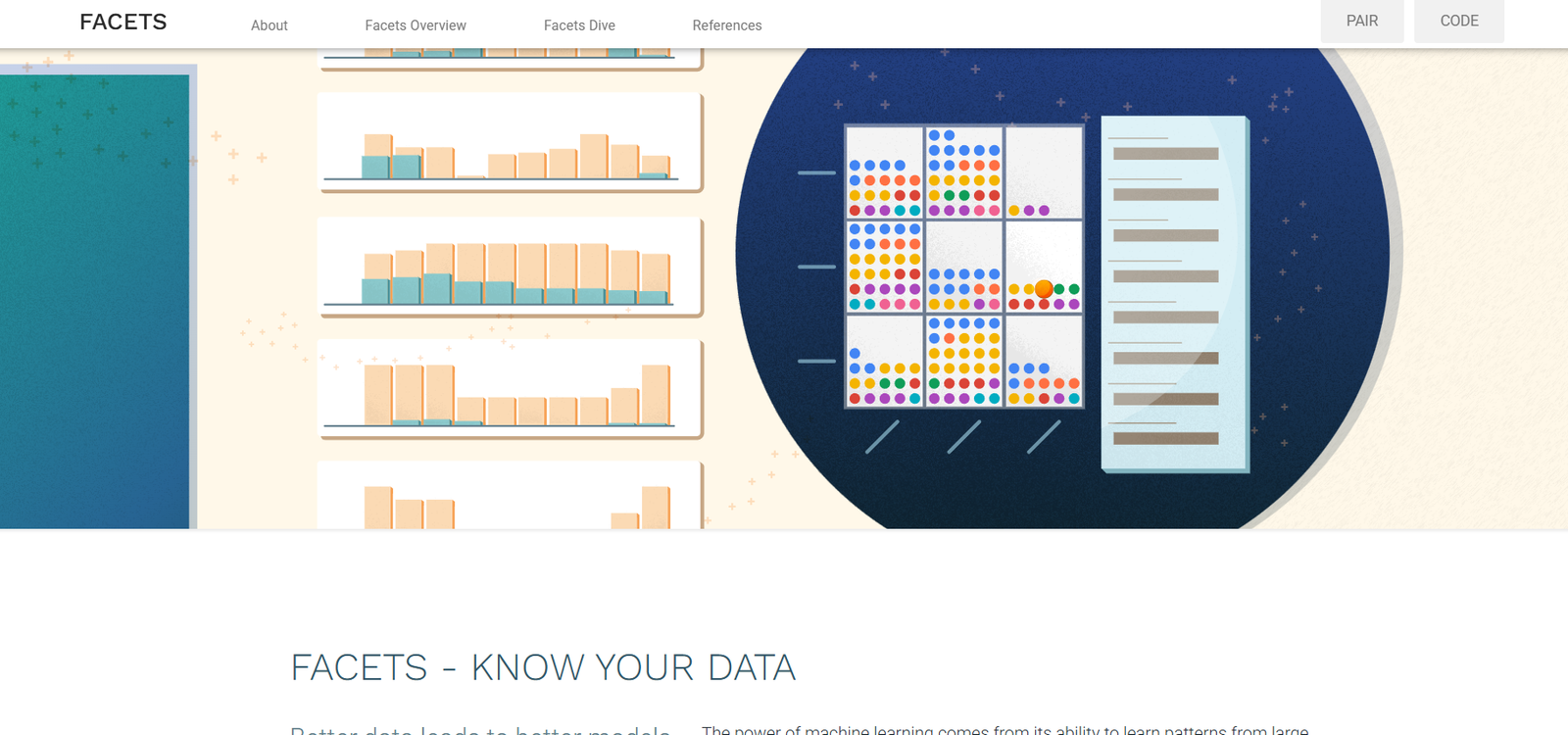

Visual interface for exploring trained machine learning models without coding. Enables users to inspect model performance, compare counterfactuals, and test fairness metrics.

Facets:

A visual exploration tool for understanding machine learning datasets. Offers data slicing, distribution visualization, and dataset comparison to identify bias and outliers.

TCAV (Testing with Concept Activation Vectors):

A technique for interpreting deep learning models by quantifying how user-defined concepts influence predictions.

Fairness Indicators:

Tools that help measure and evaluate fairness metrics across user-defined slices of data.

Language Interpretability Tool (LIT):

A platform for interpreting NLP models in real time, allowing researchers to examine embeddings, attention mechanisms, and predictions.

PAIR Guidebook:

A design resource offering best practices for creating human-centered AI applications. Covers topics like explanation, feedback, trust, and user experience in AI products.

These tools are particularly useful for machine learning practitioners, data scientists, AI ethicists, and UX designers.

How It Works

Each PAIR tool operates independently but serves a common purpose: improving human understanding of AI systems. For example:

The What-If Tool runs as a TensorBoard plugin or as a widget in Jupyter and Colab notebooks, and lets users visually test how a model behaves when its input data is modified.
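To make "modified input data" concrete, here is a minimal Python sketch of the two comparisons involved: editing one feature of a datapoint and re-running the model, and finding the closest datapoint whose prediction flips (the "nearest counterfactual" the What-If Tool can highlight). The `toy_model` scoring rule, the feature names, and the helpers `what_if` and `nearest_counterfactual` are hypothetical stand-ins, not the What-If Tool's API, and the distance here is a plain L1 sum over raw feature values.

```python
from typing import Callable, Dict, List, Optional, Tuple

Example = Dict[str, float]
Model = Callable[[Example], int]


def toy_model(x: Example) -> int:
    """Hypothetical classifier: approve (1) when the linear score is positive."""
    score = x["income"] - 10.0 * x["debt"] - 20.0
    return 1 if score > 0 else 0


def what_if(model: Model, x: Example, feature: str, new_value: float) -> Tuple[int, int]:
    """Return (original prediction, prediction after editing a single feature)."""
    edited = dict(x)  # copy so the original datapoint is left untouched
    edited[feature] = new_value
    return model(x), model(edited)


def l1_distance(a: Example, b: Example) -> float:
    """Sum of absolute per-feature differences (assumes both share the same keys)."""
    return sum(abs(a[k] - b[k]) for k in a)


def nearest_counterfactual(model: Model, x: Example, pool: List[Example]) -> Optional[Example]:
    """Closest example in `pool` whose prediction differs from the prediction for `x`."""
    target = model(x)
    candidates = [p for p in pool if model(p) != target]
    if not candidates:
        return None
    return min(candidates, key=lambda p: l1_distance(x, p))


applicant = {"income": 35.0, "debt": 2.0}
pool = [
    {"income": 25.0, "debt": 1.0},
    {"income": 45.0, "debt": 2.0},
    {"income": 90.0, "debt": 1.0},
]

if __name__ == "__main__":
    print(what_if(toy_model, applicant, "income", 50.0))       # (0, 1)
    print(nearest_counterfactual(toy_model, applicant, pool))  # {'income': 45.0, 'debt': 2.0}
```

In the tool itself these comparisons happen through the visual interface; the sketch only shows the underlying logic. With real data, features on very different scales would usually be normalized before distances are compared.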

Facets visualizations can be embedded in notebooks and ML workflows to reveal dataset quality and structure issues before a model is trained.
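Facets Overview presents per-feature summary statistics such as counts, missing values, zeros, and value ranges. The sketch below computes similar statistics with the Python standard library and flags features with many missing values; the function names, the 20% threshold, and the sample rows are illustrative assumptions, not part of Facets.

```python
from statistics import mean
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]


def summarize(rows: List[Row]) -> Dict[str, Dict[str, float]]:
    """Per-feature count, % missing, % zeros (both relative to all rows), mean, min and max.

    A feature that is absent from a row, or set to None, counts as missing.
    """
    features = sorted({key for row in rows for key in row})
    summary: Dict[str, Dict[str, float]] = {}
    for feature in features:
        values = [row.get(feature) for row in rows]
        present = [v for v in values if v is not None]
        summary[feature] = {
            "count": len(present),
            "missing_pct": 100.0 * (len(values) - len(present)) / len(values),
            "zeros_pct": 100.0 * sum(1 for v in present if v == 0) / len(values),
            "mean": mean(present) if present else float("nan"),
            "min": min(present) if present else float("nan"),
            "max": max(present) if present else float("nan"),
        }
    return summary


def flag_issues(summary: Dict[str, Dict[str, float]], max_missing_pct: float = 20.0) -> List[str]:
    """Names of features whose missing-value percentage exceeds the (hypothetical) threshold."""
    return [name for name, stats in summary.items() if stats["missing_pct"] > max_missing_pct]


rows: List[Row] = [
    {"age": 34, "income": 52.0},
    {"age": 0, "income": None},
    {"age": 51, "income": 61.0},
    {"age": 29},
]

if __name__ == "__main__":
    stats = summarize(rows)
    for name, feature_stats in stats.items():
        print(name, feature_stats)
    print(flag_issues(stats))  # ['income']
```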

TCAV allows engineers to test how abstract human concepts (like “smiling” or “medical equipment”) influence neural network decisions.
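TCAV works in two steps: learn a concept activation vector (CAV) as the normal of a linear classifier that separates a layer's activations for concept examples from its activations for random examples, then report the fraction of inputs of a class whose gradient (of that class's logit with respect to the layer) has a positive component along the CAV. The sketch below implements both steps on hand-written 2-D vectors, assuming the activations and gradients have already been extracted from a network; it trains plain logistic regression with stochastic gradient descent rather than calling the `tcav` library, and all data values are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def train_cav(concept_acts: List[Vector], random_acts: List[Vector],
              lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Fit logistic regression (concept = 1, random = 0) with SGD; return its unit-length weights."""
    w = [0.0] * len(concept_acts[0])
    b = 0.0
    data = [(a, 1.0) for a in concept_acts] + [(a, 0.0) for a in random_acts]
    for _ in range(epochs):
        for x, y in data:
            p = 1.0 / (1.0 + math.exp(-(dot(w, x) + b)))  # predicted P(concept)
            err = p - y                                     # gradient of log-loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    norm = math.sqrt(dot(w, w))
    return [wi / norm for wi in w]  # the CAV points toward the concept examples


def tcav_score(class_grads: List[Vector], cav: Vector) -> float:
    """Fraction of class-k inputs whose directional derivative along the CAV is positive."""
    positive = sum(1 for g in class_grads if dot(g, cav) > 0)
    return positive / len(class_grads)


# Hypothetical layer activations (2-D for readability).
concept_acts = [[2.0, 0.5], [1.5, -0.2], [2.5, 0.1]]
random_acts = [[-2.0, 0.3], [-1.5, -0.4], [-2.2, 0.0]]
# Hypothetical gradients of the class-k logit w.r.t. the same layer, one per class-k input.
class_grads = [[1.0, 0.2], [0.8, -0.5], [-0.6, 0.1], [0.5, 0.4]]

if __name__ == "__main__":
    cav = train_cav(concept_acts, random_acts)
    print(cav)
    print(tcav_score(class_grads, cav))  # 0.75
```

The original method also repeats this procedure against many sets of random examples and applies a statistical significance test before a score is trusted; that step is omitted here.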

LIT offers live model exploration for natural language processing, helping researchers inspect individual predictions, saliency maps, and more.
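A saliency map assigns each input token a score for how much it moved the prediction. The sketch below uses leave-one-out occlusion: drop one token, re-score the text, and record the change. Occlusion is a simple model-agnostic technique chosen here for illustration, not necessarily one of the salience methods LIT ships, and the lexicon-based `toy_sentiment` model is hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical word weights standing in for a trained sentiment model.
LEXICON: Dict[str, float] = {"great": 2.0, "good": 1.0, "boring": -1.5, "not": -0.5}


def toy_sentiment(tokens: List[str]) -> float:
    """Positive scores mean positive sentiment; unknown words contribute 0."""
    return sum(LEXICON.get(t.lower(), 0.0) for t in tokens)


def occlusion_saliency(model: Callable[[List[str]], float],
                       tokens: List[str]) -> List[Tuple[str, float]]:
    """Score each token by how much the prediction drops when that token is removed."""
    base = model(tokens)
    saliency = []
    for i, token in enumerate(tokens):
        without = tokens[:i] + tokens[i + 1:]
        saliency.append((token, base - model(without)))
    return saliency


tokens = "The plot was great but boring".split()

if __name__ == "__main__":
    for token, score in occlusion_saliency(toy_sentiment, tokens):
        print(f"{token:>8} {score:+.2f}")
```

Because the toy model is additive, the occlusion scores simply recover each word's lexicon weight ("great" gets +2.00, "boring" gets -1.50). With a real transformer, interactions between tokens make the scores far less predictable, which is what interactive inspection helps untangle.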

All tools are open-source, typically built with Python and JavaScript, and distributed through GitHub repositories (several also as pip packages), so they can be integrated into existing ML workflows.

Use Cases

PAIR tools can be applied across a range of use cases:

Model Debugging: Visualize and understand incorrect predictions to improve model performance.

Fairness Auditing: Identify and measure biases across sensitive groups or data slices.

User Research: Evaluate how users interpret and trust AI outputs in real-world interfaces.

Dataset Quality Control: Detect anomalies or imbalances before training models.

Academic Research: Explore interpretability techniques for deep learning and contribute to AI ethics discourse.

NLP Model Evaluation: Use LIT to interpret embeddings, attention, and predictions in transformer-based models.

These tools are especially valuable in regulated industries like healthcare, finance, and education, where transparency and fairness are critical.
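Fairness auditing, and the Fairness Indicators tool described earlier, rest on a simple pattern: compute the same metric separately for each data slice and compare the results. The sketch below computes false positive and false negative rates per slice at a decision threshold; the record format, slice names, scores, and function names are made-up examples, not the Fairness Indicators API.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, float, int]  # (slice value, model score, true label)


def rates_by_slice(records: List[Record], threshold: float = 0.5) -> Dict[str, Dict[str, float]]:
    """False positive rate and false negative rate per slice at a given decision threshold."""
    counts: Dict[str, Dict[str, int]] = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, score, label in records:
        predicted = 1 if score >= threshold else 0
        c = counts[group]
        if label == 1:
            c["pos"] += 1
            c["fn"] += int(predicted == 0)
        else:
            c["neg"] += 1
            c["fp"] += int(predicted == 1)
    return {
        group: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else math.nan,
            "fnr": c["fn"] / c["pos"] if c["pos"] else math.nan,
        }
        for group, c in counts.items()
    }


def largest_gap(metrics: Dict[str, Dict[str, float]], name: str) -> float:
    """Max minus min of one metric across slices, ignoring undefined (NaN) values."""
    values = [m[name] for m in metrics.values() if not math.isnan(m[name])]
    return max(values) - min(values) if values else math.nan


records: List[Record] = [
    ("A", 0.9, 1), ("A", 0.2, 0), ("A", 0.7, 0), ("A", 0.4, 1),
    ("B", 0.8, 1), ("B", 0.1, 0), ("B", 0.3, 0), ("B", 0.6, 1),
]

if __name__ == "__main__":
    metrics = rates_by_slice(records)
    print(metrics)                      # {'A': {'fpr': 0.5, 'fnr': 0.5}, 'B': {'fpr': 0.0, 'fnr': 0.0}}
    print(largest_gap(metrics, "fpr"))  # 0.5
```

Real audits usually sweep several thresholds, since a gap between slices at one threshold can shrink or grow at another.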

Pricing

PAIR tools are completely free and open-source, developed and maintained by Google’s People + AI Research initiative. You can:

Access code via GitHub: https://github.com/pair-code (TCAV and Fairness Indicators are hosted under the TensorFlow organization on GitHub)

Use tools in local environments or integrate them into custom workflows

Modify and extend the tools as needed under open licenses

There are no pricing tiers or commercial versions — the project is aimed at public research, ethical AI development, and education.

Strengths

Highly Visual Tools: Enable deep insight into model behavior without complex code.

Open-Source and Free: Completely accessible to individuals, researchers, and organizations.

Focus on Ethics: Built with fairness, accountability, and transparency in mind.

Backed by Google Research: Developed and maintained by a leading team of AI and UX experts.

Broad Integration: Compatible with TensorFlow, Jupyter, and other ML environments.

Well-Documented: Comprehensive guides and examples available across tools.

These strengths make PAIR tools a go-to resource for anyone working on responsible AI development.

Drawbacks

Focused on TensorFlow: Some tools are optimized for TensorFlow and may require workarounds for other frameworks like PyTorch.

Not End-to-End Platforms: These are utilities rather than full-scale ML pipelines.

Steep Learning Curve for Some Tools: Understanding advanced interpretability techniques (e.g., TCAV) may require prior ML knowledge.

Limited Business Support: No commercial SLAs or enterprise support, as these are research-focused tools.

Despite these limitations, PAIR tools provide invaluable insight into how AI systems operate.

Comparison with Other Tools

PAIR tools stand apart from traditional ML platforms by focusing specifically on human-AI interaction:

Compared to SHAP or LIME: SHAP and LIME focus mainly on feature attributions that explain individual predictions; PAIR tools such as TCAV and LIT extend interpretation to high-level human concepts and interactive NLP analysis.

Compared to IBM AI Fairness 360: IBM’s toolkit offers a broader catalog of fairness metrics plus bias-mitigation algorithms, but places less emphasis on interactive exploration and UX design.

Compared to Weights & Biases: W&B is aimed at experiment tracking; PAIR tools are more focused on interpretability and transparency.

Overall, PAIR complements — rather than replaces — these platforms by providing insights that deepen understanding and promote ethical development.

Customer Reviews and Testimonials

While there are no traditional customer testimonials (since the tools are free and open-source), PAIR tools are widely cited in academic research, AI ethics case studies, and machine learning conferences. The What-If Tool and Facets, in particular, have received positive community feedback for their ease of use and insightful visualizations.

Researchers and engineers value the ability to perform introspective analysis without writing extra code, while UX teams use PAIR’s design principles to improve the transparency of AI-powered products.

Conclusion

PAIR (People + AI Research) is a visionary project from Google that brings ethical, transparent, and interpretable AI within reach of both technical and non-technical users. Through tools like the What-If Tool, Facets, TCAV, and LIT, PAIR empowers researchers and developers to explore, debug, and explain AI systems with confidence. For anyone working in AI — especially in fairness, ethics, or interpretability — PAIR provides a foundational set of tools to build more responsible and human-centered AI systems.