Sesterce Cloud is a high-performance cloud computing platform designed for AI developers, startups, and enterprises seeking scalable GPU infrastructure without the usual complexity. Created by the team behind Sesterce, known for simplifying access to high-performance computing, Sesterce Cloud focuses on delivering GPU-accelerated computing power optimized for AI, machine learning, and data science workloads.

Unlike traditional cloud platforms that often require in-depth configuration and DevOps expertise, Sesterce Cloud offers a simplified interface for launching GPU instances, managing workloads, and collaborating with team members. It’s built for speed, flexibility, and developer-friendliness, which makes it well suited to businesses that want to train large models, run inference jobs, or experiment with AI systems at scale.

Whether you’re a researcher needing occasional GPU access or a business looking to scale production AI applications, Sesterce Cloud provides the performance, cost-efficiency, and ease of use to support both individual and team-based projects.

Features

Sesterce Cloud comes with a robust set of features tailored to AI and machine learning use cases:

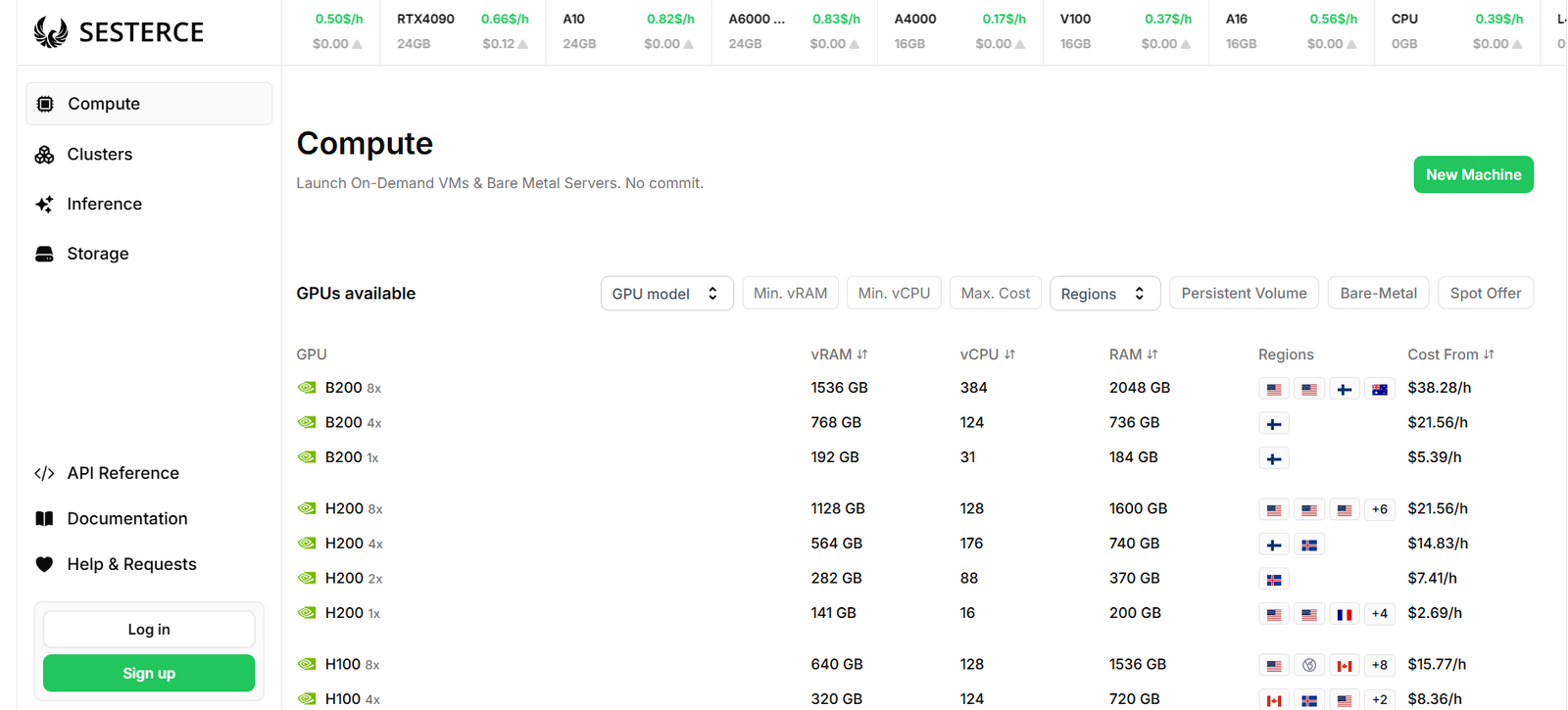

GPU-Accelerated Infrastructure: Access to powerful GPU instances equipped with NVIDIA A100, H100, and other high-performance chips suitable for deep learning and generative AI workloads.

Pre-configured Environments: Quickly spin up instances with pre-installed frameworks such as PyTorch, TensorFlow, and Jupyter Notebooks.

Team Collaboration: Share cloud instances, manage resources, and collaborate with team members in real time.

On-Demand and Reserved Instances: Choose between flexible on-demand usage or reserve long-term GPU capacity to optimize costs.

Multi-Region Availability: Deploy workloads across multiple data centers worldwide for lower latency and better redundancy.

Simple UI and API Access: Launch and manage workloads via a user-friendly dashboard or automate deployments using the Sesterce Cloud API.

Secure Networking and Storage: Each instance is provisioned with an isolated environment, encrypted storage, and secure networking to protect data.

Usage-Based Billing: Transparent pricing based on actual resource consumption, with no hidden fees or overage penalties.
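As a quick illustration of the pre-configured environments mentioned above, the following sketch checks whether the listed frameworks are present on a freshly launched instance. It is a minimal example using only the Python standard library; the module names (`torch`, `tensorflow`, `jupyterlab`) are the usual import names for those packages, and whether a particular Sesterce Cloud image ships all of them is an assumption to verify, not something the platform documentation confirms here.

```python
import importlib.util

# Import names of the frameworks the article lists as pre-installed.
# Whether a given image actually ships them is an assumption to verify.
EXPECTED_MODULES = ("torch", "tensorflow", "jupyterlab")


def check_modules(names=EXPECTED_MODULES):
    """Return {module_name: True/False} without importing the modules."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, present in check_modules().items():
        print(f"{name}: {'found' if present else 'missing'}")
```

Running the script on an instance prints one line per framework, which makes it easy to spot a missing package before starting a long job.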

How It Works

Sesterce Cloud is designed to remove the typical friction points of cloud infrastructure management, particularly for AI workloads. The platform allows users to get started in a few straightforward steps:

Create an Account: Sign up for Sesterce Cloud using your email or GitHub account.

Choose a Machine Type: Select from available GPU-powered virtual machines based on your performance and budget requirements.

Deploy with One Click: Launch instances with pre-installed ML frameworks or customize your setup based on your environment needs.

Work and Collaborate: Use terminal access, JupyterLab, or remote desktop tools to manage your projects and collaborate with your team in real time.

Scale as Needed: Add more compute power on demand or set up automated scaling based on workload intensity.

Monitor Usage: Track GPU usage, billing, and system metrics directly from the dashboard.

The platform emphasizes ease of use, making it possible for AI engineers, researchers, and teams to go from signup to training models in minutes.
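To make the "Scale as Needed" step more concrete, here is a minimal sketch of a threshold-based scaling rule of the kind automated scaling typically relies on. It does not reflect how Sesterce Cloud implements scaling; the function name, the utilization thresholds, and the instance limits are all hypothetical values chosen for illustration.

```python
def desired_instances(current, gpu_utilization, low=0.30, high=0.80,
                      min_instances=1, max_instances=8):
    """Threshold rule: add one instance above `high`, drop one below `low`.

    gpu_utilization is the average utilization across instances, 0.0 to 1.0.
    All thresholds and limits are illustrative defaults, not platform values.
    """
    if not 0.0 <= gpu_utilization <= 1.0:
        raise ValueError("gpu_utilization must be between 0.0 and 1.0")
    if gpu_utilization > high:
        target = current + 1
    elif gpu_utilization < low:
        target = current - 1
    else:
        target = current
    # Clamp to the configured bounds.
    return max(min_instances, min(max_instances, target))
```

With these defaults, two instances averaging 90% GPU utilization would scale to three, while a single idle instance stays at one because of the lower bound. Changing by one instance per evaluation keeps the rule simple and avoids large swings in cost.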

Use Cases

Sesterce Cloud supports a wide variety of use cases where GPU computing is essential:

Model Training: Train deep learning models such as LLMs, GANs, and CNNs using high-performance NVIDIA GPUs.

Inference at Scale: Run production-level inference on large models with minimal latency.

AI Research: Ideal for researchers needing short-term or scalable access to advanced GPU infrastructure.

Generative AI: Supports tools and pipelines for generating images, audio, or text using diffusion models and transformer-based architectures.

Data Science Projects: Perform data preprocessing, visualization, and machine learning using Jupyter or Python environments.

Fine-Tuning and Experimentation: Rapidly test different model configurations and fine-tune open-source models using scalable GPU resources.

Academic Collaboration: Enable university teams or student groups to access GPU resources without managing hardware infrastructure.

Pricing

Sesterce Cloud uses a transparent usage-based pricing model. Pricing varies depending on the type of GPU and machine configuration selected. Below are typical options available, based on public information on the Sesterce Cloud platform:

A100 Instances:

Starting at approximately $2.50 per hour

Suitable for large-scale training and inference

RTX 4090 or 3090 Instances:

Range from $0.80 to $1.50 per hour

Great for developers and researchers with moderate to high compute needs

CPU-Only Instances:

Starting at under $0.50 per hour

Suitable for light workloads or orchestration tasks

Storage and Bandwidth:

Storage is charged based on usage, with transparent GB pricing

Bandwidth is billed according to data egress, at rates positioned as competitive for AI workloads

Sesterce Cloud also offers discounts for reserved usage, as well as enterprise plans for high-volume clients. All pricing is clearly listed in the dashboard for users to estimate and manage their expenses effectively.
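Because billing is usage-based, a rough compute estimate is simple arithmetic: hourly rate times hours times number of instances. The sketch below uses the approximate rates quoted above; the dictionary keys and the `reserved_discount` parameter are hypothetical, since the actual reserved-usage discount is not stated, and storage and bandwidth charges are left out.

```python
# Approximate hourly rates quoted in the article (USD). Ranges and
# "starting at" figures are collapsed to one number for illustration.
HOURLY_RATE_USD = {
    "a100": 2.50,       # "starting at approximately $2.50"
    "rtx_low": 0.80,    # low end of the RTX 4090/3090 range
    "rtx_high": 1.50,   # high end of the RTX 4090/3090 range
    "cpu": 0.50,        # article says "under $0.50"; used as an upper bound
}


def estimate_cost(instance, hours, count=1, reserved_discount=0.0):
    """Estimate compute cost in USD; storage and egress are not included.

    reserved_discount is a hypothetical fraction (e.g. 0.2 for 20% off);
    the article does not state actual reserved-instance discounts.
    """
    if not 0.0 <= reserved_discount < 1.0:
        raise ValueError("reserved_discount must be in [0.0, 1.0)")
    rate = HOURLY_RATE_USD[instance]
    return round(rate * hours * count * (1.0 - reserved_discount), 2)


if __name__ == "__main__":
    # Four A100 instances for a 24-hour training run, on demand.
    print(estimate_cost("a100", hours=24, count=4))  # 240.0
```

At the quoted starting rate, four A100 instances running for a full day come to about $240 before storage and egress. Actual figures should be taken from the dashboard, which lists current pricing.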

Strengths

Powerful GPUs optimized for machine learning and generative AI

Transparent, usage-based pricing without hidden fees

Pre-configured environments reduce setup time

Easy-to-use dashboard suitable for beginners and teams

High availability across global regions

Team features and API access for automation

Ideal for both training and inference workloads

Drawbacks

Limited support for non-AI use cases compared to general-purpose cloud providers

Less mature than established platforms like AWS or GCP

May lack some advanced orchestration tools found in enterprise platforms

Smaller ecosystem and community than established cloud providers

Primarily focused on GPU compute; not ideal for broad infrastructure needs

Comparison with Other Tools

Compared to major cloud providers like AWS, Google Cloud, and Azure, Sesterce Cloud offers a more focused and simplified experience tailored for AI users. While platforms like AWS EC2 offer a wider variety of services, they also come with a steeper learning curve and more complex pricing.

In contrast, Sesterce Cloud is more approachable, with a user-friendly interface and pricing that appeals to developers, researchers, and small teams. Unlike Colab or Kaggle, which offer limited free GPU access, Sesterce Cloud is built for professional and enterprise-grade projects, allowing scalable training and production-ready deployments.

When compared to platforms like RunPod or Lambda Labs, Sesterce Cloud stands out for its simplicity, team collaboration features, and transparent billing. It’s especially appealing to those who want to launch and manage AI workloads quickly without the operational complexity of larger cloud platforms.

Customer Reviews and Testimonials

While formal testimonials are limited on the public site, early adopters have highlighted several benefits of using Sesterce Cloud. Users appreciate the straightforward onboarding, reliable GPU availability, and fair pricing structure.

Feedback from developers on social media and startup communities mentions that Sesterce Cloud reduces the hassle of configuring AI environments, especially for teams building LLM applications or running fine-tuning jobs.

Some customers also note the responsive support team and clear documentation as added advantages when compared to more complex providers.

As the platform grows, user reviews are expected to expand, particularly among AI startups and academic research groups.

Conclusion

Sesterce Cloud is a powerful, user-friendly cloud platform built specifically for AI, machine learning, and data science workloads. Its focus on GPU-accelerated infrastructure, simplicity, and transparent pricing makes it a compelling choice for teams who need reliable performance without the overhead of managing traditional cloud platforms.

Whether you’re an AI researcher, developer, or growing tech company, Sesterce Cloud offers the tools to launch, train, and scale models with ease. With pre-configured environments, collaborative features, and enterprise-ready hardware, it fills the gap between costly cloud services and DIY infrastructure.

As AI applications continue to expand, platforms like Sesterce Cloud will be essential in democratizing access to compute power while keeping costs and complexity in check.