Hume AI is a research-driven technology company building emotionally intelligent AI models that understand and respond to human expression. By leveraging neuroscience, machine learning, and affective computing, Hume AI creates tools that help machines interpret human emotional states through voice, facial expression, and language.

Unlike traditional AI systems that focus solely on logical inputs and outputs, Hume AI seeks to understand the underlying human experience, making interactions with machines more empathetic, personalized, and emotionally aware. Its mission is rooted in ethics and scientific rigor: to develop AI that enhances, rather than replaces, human well-being.

Founded by Dr. Alan Cowen, a former researcher at Google and the University of California, Berkeley, Hume AI is at the forefront of human-centric artificial intelligence, blending psychology, linguistics, and deep learning to build expressive, responsive systems.

Features

1. Emotion AI API (Empathic Voice Interface)

Hume AI’s flagship product is the Empathic Voice Interface (EVI), a conversational voice API that interprets a speaker’s vocal tone and adapts its replies accordingly. Hume’s related expression measurement models let developers score emotional expressions such as happiness, stress, curiosity, sadness, and frustration in audio. Audio can be processed in real time, and the returned scores cover a wide range of emotional cues, enabling more dynamic and responsive applications.

2. Multimodal Emotion Recognition

Hume AI can combine inputs from voice, facial expressions, and language to build a complete emotional profile. This multimodal approach increases accuracy and contextual awareness in applications like healthcare, customer service, and virtual assistants.

3. Expressivity Model

Hume’s expressivity models capture subtle nonverbal signals from tone, pitch, cadence, and rhythm of speech. These insights go beyond sentiment analysis by detecting nuanced emotions in how something is said, not just what is said.

4. Emotionally Adaptive Interfaces

Applications built with Hume AI can adapt responses based on a user’s emotional state. This can help virtual agents become more empathetic, educational tools more personalized, and mental health apps more supportive.

5. Scientific Emotion Taxonomy

Unlike generic sentiment models, Hume AI’s models are based on peer-reviewed scientific research. The emotional categories and datasets are derived from large-scale cross-cultural studies, ensuring the model is inclusive and grounded in human behavioral science.

6. Privacy and Ethical Standards

Hume AI is built around privacy-first principles. Its models run on-device or with strict data controls, and its research ethics prioritize well-being, consent, and fairness in AI deployment.

7. Real-Time Analysis and SDKs

The platform includes SDKs and tools that enable developers to integrate emotional understanding into apps in real-time, with support for mobile, web, and enterprise environments.

8. Developer-Friendly Interface

The Hume AI API comes with detailed documentation, sample code, and quick-start guides, making it accessible for startups, researchers, and enterprise developers.

How It Works

Hume AI’s emotional intelligence platform operates through a cloud-based API that can be integrated into various applications via simple REST endpoints or SDKs.

Data Input

Users send audio or video data to the API. The system supports real-time streaming or batch analysis of pre-recorded files.

Signal Processing

Hume AI uses deep learning models trained on massive, annotated datasets of human expressions. It analyzes acoustic signals (tone, pitch, intensity) and visual cues (facial movements, expressions) to decode emotional states.

Emotion Scoring

The API returns a JSON response with a range of emotion expression scores across multiple dimensions. For voice input, it may return probabilities for emotions like amusement, determination, pride, disappointment, and more.

Interpretation and Integration

Developers can use these scores to adapt UI/UX elements, generate dynamic responses, or trigger certain workflows in their applications. For example, if a customer’s tone suggests frustration, a chatbot can respond more empathetically or escalate to a human agent.

Real-Time Feedback Loops

In continuous applications like virtual therapy or educational tools, Hume AI allows systems to dynamically adjust based on ongoing emotional feedback.
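The scoring, interpretation, and feedback-loop steps above can be illustrated with a small Python sketch. It is not Hume AI's SDK or its actual response schema: the JSON shape in SAMPLE_RESPONSE, the function names, the smoothing factor, and the 0.6 threshold are all assumptions made for illustration. The sketch parses a score payload, ranks the strongest expressions, smooths scores across successive readings with an exponential moving average, and decides whether to escalate when frustration stays high.

```python
import json
from dataclasses import dataclass, field

# Hypothetical payload: the real API's JSON schema may differ.
SAMPLE_RESPONSE = """
{"emotions": [
  {"name": "amusement", "score": 0.12},
  {"name": "determination", "score": 0.08},
  {"name": "disappointment", "score": 0.31},
  {"name": "frustration", "score": 0.74}
]}
"""


def parse_scores(raw: str) -> dict[str, float]:
    """Turn the (assumed) JSON payload into a name -> score mapping."""
    payload = json.loads(raw)
    return {item["name"]: float(item["score"]) for item in payload["emotions"]}


def top_emotions(scores: dict[str, float], k: int = 3) -> list[tuple[str, float]]:
    """Return the k highest-scoring expressions, strongest first."""
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]


@dataclass
class EmotionTracker:
    """Smooths scores over time so one noisy reading does not trigger a reaction."""

    alpha: float = 0.5  # weight given to the newest reading (assumed value)
    smoothed: dict[str, float] = field(default_factory=dict)

    def update(self, scores: dict[str, float]) -> dict[str, float]:
        for name, score in scores.items():
            # The first reading for an emotion seeds the average.
            previous = self.smoothed.get(name, score)
            self.smoothed[name] = self.alpha * score + (1 - self.alpha) * previous
        return self.smoothed


def choose_action(smoothed: dict[str, float], threshold: float = 0.6) -> str:
    """Escalate when smoothed frustration reaches the (assumed) threshold."""
    if smoothed.get("frustration", 0.0) >= threshold:
        return "escalate_to_human"
    return "continue"


if __name__ == "__main__":
    scores = parse_scores(SAMPLE_RESPONSE)
    print(top_emotions(scores, k=2))

    tracker = EmotionTracker()
    for reading in ({"frustration": 0.2}, {"frustration": 0.9}, scores):
        print(choose_action(tracker.update(reading)))
```

Because the scores are probabilistic, acting on a smoothed value rather than a single reading is one way to avoid overreacting to a brief spike. The right threshold and smoothing factor would need tuning against real data.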

Use Cases

1. Customer Support and Experience

Companies use Hume AI to enhance virtual assistants and chatbots by detecting customer emotions. If a user sounds frustrated or upset, the system can offer alternative solutions, escalate the issue, or change its tone.

2. Mental Health and Wellness Apps

Therapy and wellness platforms can integrate Hume AI to track emotional well-being through voice diaries or user check-ins, offering a more personalized support system.

3. Education and eLearning

EdTech platforms use emotional feedback to gauge student engagement, confusion, or enthusiasm during lessons. This allows instructors or AI tutors to adjust teaching strategies in real time.

4. Healthcare and Remote Monitoring

Doctors and therapists can use emotion-aware systems to monitor patient mood and stress levels, especially in remote care settings. This is valuable in managing chronic conditions, post-operative care, or mental health treatment.

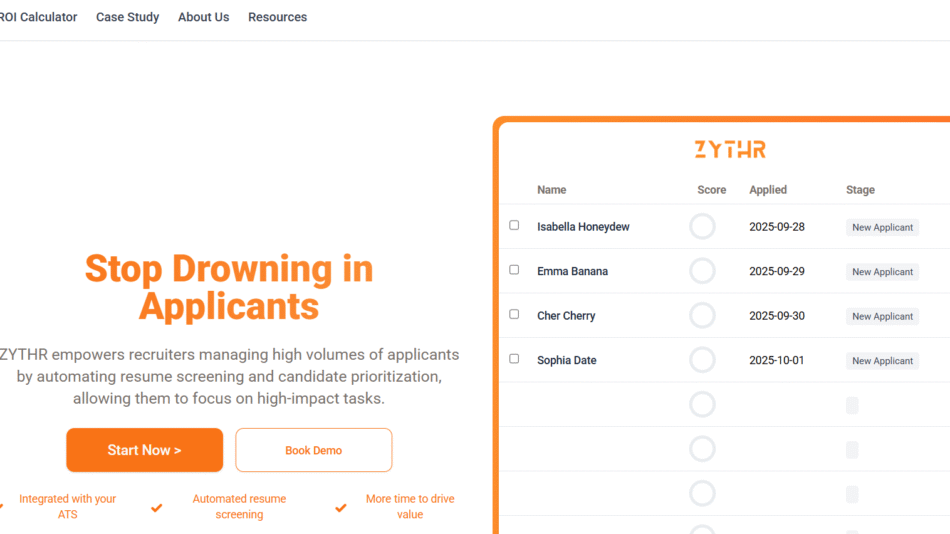

5. Human Resources and Hiring

In recruitment, Hume AI can assist in evaluating interview dynamics by analyzing tone and expressions. It provides an additional layer of insight alongside traditional assessment methods.

6. Gaming and Virtual Characters

Gaming studios use emotion recognition to make NPCs (non-player characters) react more realistically based on player tone and behavior, creating immersive gameplay experiences.

7. Research and Behavioral Science

Researchers use Hume AI for emotion annotation, cross-cultural expression studies, and large-scale psychological experiments that require scalable emotional analysis tools.

8. Accessibility Tools

Emotion-aware systems can support people with autism or communication difficulties by interpreting the emotional states of others and providing feedback in real time.

Pricing

According to Hume AI’s website at the time of writing, pricing is available on request, and the platform offers custom enterprise plans based on usage, support, and integration needs.

Pricing tiers typically depend on:

Number of API calls

Real-time vs. batch processing

Data type (voice, video, text)

Custom model training or adaptation

Enterprise support levels

Strengths

Scientifically grounded emotion models

Multimodal support (voice, facial expressions, and text)

Real-time and developer-friendly APIs

Strong focus on ethics and privacy

Customizable and scalable for enterprise use

Applicable across diverse industries

Accurate, nuanced emotion recognition beyond simple sentiment

Drawbacks

No public self-serve tier or open pricing as of now

Still under active development; access is limited

Requires high-quality input data (e.g., clean audio) for best results

Emotion scores are probabilistic estimates, not deterministic readings of how a person feels

Interpretation and ethical use depend heavily on implementation

Comparison with Other Tools

Hume AI operates in the emerging field of emotion AI, alongside tools like Affectiva, Beyond Verbal, and Microsoft Azure Emotion API. However, Hume differentiates itself in several key areas:

Affectiva focuses heavily on automotive and in-cabin monitoring, while Hume targets broader human-machine interaction use cases.

Beyond Verbal specializes in voice emotion analytics but does not offer the same multimodal capabilities.

Microsoft’s Emotion API offers basic facial emotion detection but lacks the scientific depth and expressivity granularity that Hume AI provides.

In short, Hume AI stands out for its scientific foundation, ethical commitment, and multimodal emotional intelligence.

Customer Reviews and Testimonials

Because Hume AI is primarily a research-driven and enterprise-facing platform, it is not widely reviewed on public platforms like G2 or Product Hunt. However, its tools have received praise in academic circles and from early adopters in health tech, education, and AI development.

Feedback highlights include:

“Hume AI’s models capture nuances in emotion that other APIs miss.”

“It’s like giving empathy to machines.”

“We’ve seen stronger engagement in our product since implementing emotional feedback.”

Hume AI’s founder, Dr. Alan Cowen, is a widely published researcher in affective science, and the company’s work is regularly cited in peer-reviewed journals and scientific conferences.

Conclusion

Hume AI is redefining the way machines understand humans. With its unique focus on emotional intelligence, rooted in scientific research and ethical design, Hume AI brings a new layer of empathy and personalization to human-computer interaction.

Whether you’re building a mental health platform, a voice assistant, or a virtual classroom, Hume AI provides the tools to make your product emotionally aware, ethically sound, and deeply human-centric.