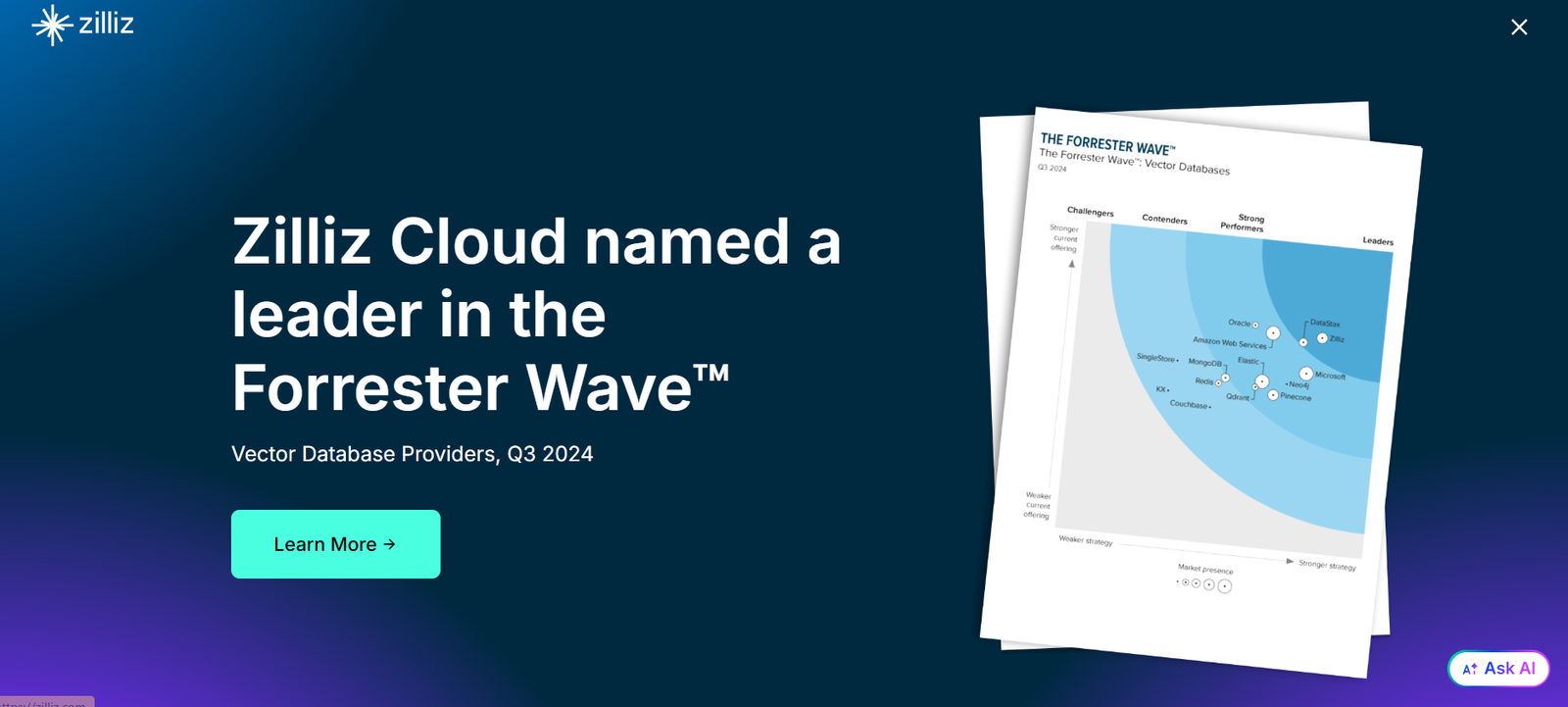

Zilliz is a cloud-native vector database service designed to power AI applications, including similarity search, recommendation systems, and retrieval-augmented generation (RAG). Built by the creators of Milvus, a widely used open-source vector database, Zilliz offers a fully managed platform optimized for fast, scalable, and accurate vector search.

Zilliz is purpose-built to support AI-driven workloads by enabling fast querying of vector embeddings generated from machine learning models. It is ideal for applications involving unstructured data such as text, images, audio, and video. The platform is designed to integrate seamlessly into LLM pipelines, making it a strong choice for teams developing semantic search tools, RAG systems, and AI agents.

With a fully managed cloud infrastructure, automatic scaling, and enterprise-grade security, Zilliz eliminates the operational overhead of managing vector databases and allows developers to focus on building intelligent applications.

Zilliz: Features

Zilliz includes a robust set of features tailored for AI, ML, and data-intensive applications.

Vector Database as a Service – Fully managed cloud-native vector database with automated deployment, scaling, and updates.

Milvus-Powered Backend – Built on Milvus, ensuring compatibility and high performance for vector similarity search.

Hybrid Search – Supports both vector similarity and scalar filtering for complex queries on both unstructured and structured data.

Multi-Modal Vector Support – Handles vectors from text, image, audio, video, and other machine learning embeddings.

High-Performance ANN Search – Uses Approximate Nearest Neighbor (ANN) index types such as HNSW and IVF, which trade a small amount of recall for much faster search than an exhaustive scan.

Scalability – Horizontally scalable to handle billions of vector records across distributed infrastructure.

Data Persistence and Durability – Ensures high availability and automatic backups with cloud-native architecture.

Built-in Integration with LLMs – Designed to support retrieval-augmented generation pipelines using OpenAI, Cohere, Hugging Face, and other providers.

RESTful and gRPC APIs – Offers a RESTful API plus SDKs for Python, Java, Go, Node.js, and more.

Access Control and Security – Includes authentication, encryption, and fine-grained access controls suitable for enterprise environments.

Zero Ops Infrastructure – Users can create collections, insert data, and query vectors without worrying about server setup, scaling, or maintenance.
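To make the IVF idea from the feature list more concrete, here is a minimal pure-Python sketch of an inverted-file index. It is a toy illustration, not Zilliz or Milvus code: the function names are hypothetical, the centroids are hand-picked rather than learned with k-means, and `nprobe` is used here simply as the number of buckets scanned per query.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroids(vector, centroids, n):
    """Indices of the n centroids closest to the vector."""
    order = sorted(range(len(centroids)),
                   key=lambda i: squared_distance(vector, centroids[i]))
    return order[:n]


def build_ivf(vectors, centroids):
    """Assign every vector id to the bucket of its closest centroid."""
    buckets = {i: [] for i in range(len(centroids))}
    for vec_id, vec in vectors.items():
        buckets[nearest_centroids(vec, centroids, 1)[0]].append(vec_id)
    return buckets


def ivf_search(query, vectors, centroids, buckets, k, nprobe):
    """Scan only the nprobe buckets nearest the query, not every vector."""
    candidates = [
        vec_id
        for bucket in nearest_centroids(query, centroids, nprobe)
        for vec_id in buckets[bucket]
    ]
    candidates.sort(key=lambda vec_id: squared_distance(query, vectors[vec_id]))
    return candidates[:k]


# Hand-picked centroids (assumption); a real IVF index learns them from the data.
centroids = [[0.0, 0.0], [10.0, 10.0]]
vectors = {"a": [0.5, 0.2], "b": [1.0, 1.5], "c": [9.0, 9.5], "d": [10.5, 10.0]}
buckets = build_ivf(vectors, centroids)
print(buckets)  # {0: ['a', 'b'], 1: ['c', 'd']}
print(ivf_search([9.2, 9.4], vectors, centroids, buckets, k=1, nprobe=1))  # ['c']
```

Scanning fewer buckets is faster but can miss a true neighbor that sits just across a bucket boundary, which is why this kind of search is called approximate.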

Zilliz: How It Works

Zilliz works by storing high-dimensional vectors representing unstructured data and enabling fast similarity search over those vectors. The vectors are typically generated using embedding models from natural language processing, computer vision, or other machine learning frameworks.

To use Zilliz, developers begin by creating a collection, which is similar to a database table. Each collection contains vector fields along with optional metadata fields. Vectors are inserted into the collection using the API, and scalar attributes (like tags or categories) can be used for filtering.
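The collection model described above can be sketched with a small in-memory stand-in. This is not the Zilliz or Milvus SDK: `ToyCollection`, its methods, and the field names `category` and `lang` are hypothetical, chosen only to show a fixed-dimension vector field stored next to scalar metadata that can be filtered.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCollection:
    """In-memory stand-in for a collection: one vector field plus metadata."""
    name: str
    dim: int  # fixed dimensionality of the vector field
    rows: list = field(default_factory=list)

    def insert(self, vector, **metadata):
        # Reject vectors whose length does not match the declared dimension.
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        row_id = len(self.rows)
        self.rows.append({"id": row_id, "vector": list(vector), **metadata})
        return row_id

    def query(self, **conditions):
        # Scalar filtering: keep rows whose metadata equals every condition.
        return [
            row for row in self.rows
            if all(row.get(key) == value for key, value in conditions.items())
        ]


docs = ToyCollection(name="docs", dim=3)
docs.insert([0.1, 0.9, 0.0], category="faq", lang="en")
docs.insert([0.8, 0.1, 0.1], category="blog", lang="en")
docs.insert([0.2, 0.7, 0.1], category="faq", lang="de")

faq_ids = [row["id"] for row in docs.query(category="faq")]
print(faq_ids)  # [0, 2]
```

In a real deployment the same three steps (declare a schema, insert rows, filter on scalar fields) go through the service's API instead of a local list.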

Zilliz indexes these vectors using approximate nearest neighbor algorithms for efficient search. A query must first be converted into a vector by the same embedding model used for the stored data, whether it starts as a text prompt, a sentence, or an image. The system then compares the query vector against the index and returns the most similar stored vectors, ranked by distance or similarity score.
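The ranking step can be illustrated with exact, brute-force search, which is the baseline that ANN indexes approximate. The sketch below is plain Python, not Zilliz code; it assumes cosine similarity as the metric, and the two-dimensional vectors and their labels are made up for the example.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, vectors, k):
    """Exact search: score every stored vector, return the k best (id, score) pairs."""
    scored = [(vec_id, cosine_similarity(query, vec)) for vec_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up embeddings; real ones typically have hundreds of dimensions.
stored = {
    "cat": [1.0, 0.0],
    "kitten": [0.9, 0.1],
    "car": [0.0, 1.0],
}
results = top_k([1.0, 0.0], stored, k=2)
print([vec_id for vec_id, _ in results])  # ['cat', 'kitten']
```

Exact search touches every stored vector, so its cost grows linearly with the collection size. An ANN index avoids most of those comparisons at the price of occasionally missing a true nearest neighbor.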

For RAG systems, Zilliz can be connected to LLM pipelines where a user prompt is embedded, the top relevant chunks of context are retrieved from Zilliz, and then the information is passed to a language model for response generation. This setup is used widely for enterprise search, knowledge bases, chatbots, and AI assistants.
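The embed, retrieve, and generate flow can be sketched end to end in a few lines. Everything here is a stand-in: `embed` is a toy bag-of-words function rather than a real embedding model, retrieval runs over a Python list rather than Zilliz, and the final language model call is left out, so the sketch stops at building the prompt.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a, b):
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, chunks, k):
    """Return the k chunks most similar to the question."""
    query_vec = embed(question)
    ranked = sorted(chunks, key=lambda chunk: similarity(query_vec, embed(chunk)), reverse=True)
    return ranked[:k]


def build_prompt(question, context_chunks):
    """Assemble the text that would be sent to a language model."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


chunks = [
    "Refunds are issued within 14 days of purchase.",
    "Our office is closed on public holidays.",
    "Shipping takes three to five business days.",
]
question = "How many days do refunds take?"
context = retrieve(question, chunks, k=1)
prompt = build_prompt(question, context)
print(prompt)
```

In production, the `retrieve` step is where the vector database sits: the chunks are embedded once at ingestion time, and only the question is embedded per request.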

All infrastructure is handled by Zilliz Cloud, meaning developers can scale to billions of vectors without managing nodes, replication, or indexing settings manually.

Zilliz: Use Cases

Zilliz supports a wide array of AI-driven use cases across industries.

Retrieval-Augmented Generation – Power LLMs with real-time, contextually relevant knowledge using vector retrieval from Zilliz.

Semantic Search – Enable search experiences that match content based on meaning rather than exact keywords, ideal for documents, media, or customer support.

Recommendation Systems – Use vector similarity to suggest products, content, or users based on behavior or embeddings.

Image and Video Search – Store image or video embeddings for similarity-based retrieval in media, fashion, or surveillance applications.

Voice and Audio Search – Search across audio or voice data using vectorized audio embeddings for call centers or transcription tools.

Chatbots and Virtual Agents – Feed relevant data from Zilliz to AI assistants to improve response accuracy and reduce hallucinations.

Enterprise Knowledge Management – Index internal documents and data sources into Zilliz for secure, AI-enhanced information retrieval.

E-commerce and Retail – Implement vector-based product search, visual recommendations, and personalization.

Security and Threat Detection – Detect behavioral anomalies by comparing event vectors in real time.

Healthcare and Life Sciences – Retrieve similar patient records, research articles, or diagnostic images based on semantic similarity.

Zilliz: Pricing

Zilliz offers a free tier and usage-based pricing for its cloud service, Zilliz Cloud. Pricing is based on resources consumed, including compute, storage, and query volume.

Free Tier – Includes a limited number of operations per month, making it ideal for testing, development, or proof-of-concept projects.

Pay-as-You-Go – Charges are based on the number of collections, vector dimensions, queries, and storage usage. Costs can be estimated in advance through the Zilliz Cloud console.

Enterprise Plans – Custom pricing for organizations requiring higher performance, SLAs, advanced security, and dedicated infrastructure.

Key pricing factors include:

Storage used (based on the number and size of vectors)

Query throughput, measured in queries per second (QPS)

Read/write operations

Data retention and backup

Region and availability zone preferences
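For the storage factor, a rough lower bound can be worked out with simple arithmetic. The sketch below is a back-of-the-envelope estimate, not Zilliz's billing formula: it assumes each vector component is a 32-bit float (4 bytes) and ignores index structures, metadata fields, and replicas, all of which add to the real footprint. The function name is hypothetical.

```python
def raw_vector_storage_bytes(num_vectors, dim, bytes_per_value=4):
    """Raw size of the vector data alone: count x dimensions x bytes per component."""
    return num_vectors * dim * bytes_per_value


# One million 768-dimensional float32 embeddings.
size = raw_vector_storage_bytes(num_vectors=1_000_000, dim=768)
print(size)              # 3072000000
print(size / 1024 ** 3)  # about 2.86 (GiB)
```

Doubling either the vector count or the embedding dimension doubles this figure, which is why the choice of embedding model affects storage cost as well as search quality.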

Billing is monthly and automatically scales with usage. Developers can track usage in real-time through the Zilliz Cloud dashboard.

Zilliz: Strengths

Zilliz offers several compelling strengths that make it a preferred platform for developers building AI-powered applications.

Fully Managed – No need to manage nodes, indexing, or replication; Zilliz handles all backend infrastructure automatically.

Built on Milvus – Leverages the maturity and community backing of Milvus, a leading open-source vector database.

AI-Native Design – Built specifically for AI applications like semantic search, RAG, and personalization.

Multi-Modal Support – Handles vectors from text, images, audio, and more without added configuration.

Scalable and Fast – Optimized for high-throughput vector ingestion and low-latency querying across billions of records.

Enterprise Ready – Supports SSO, access controls, encryption, and audit logs for secure deployment.

Developer-Friendly – Intuitive APIs, clear documentation, and SDKs for fast integration and testing.

Global Cloud Access – Deployed across multiple regions for low-latency access and high availability.

Free Tier – Allows developers to start experimenting without cost barriers.

Zilliz: Drawbacks

While Zilliz is a powerful platform, there are some considerations to keep in mind.

Cloud-Only – Zilliz is offered primarily as a managed cloud service; teams that need on-premises deployment and full control may prefer self-hosted Milvus.

Limited Advanced UI – Some users may prefer more advanced visual dashboards or GUI tools for non-developers.

Usage-Based Pricing – Costs may increase with high query volume or large-scale deployments, so monitoring usage is important.

Less Suitable for Non-AI Search – Traditional keyword-based search without embedding models is not Zilliz’s primary use case.

Learning Curve – Users unfamiliar with embeddings, vector indexing, or ANN algorithms may require some time to get up to speed.

Dependency on External Models – Search relevance depends on the quality of the embedding models used, which are often hosted externally (e.g., OpenAI).

Zilliz: Comparison with Other Tools

Zilliz is often compared to other vector search tools such as Pinecone, Weaviate, Milvus, and FAISS.

Compared to Pinecone, Zilliz offers an open-source foundation via Milvus and emphasizes hybrid search and multi-modal support. Both offer fully managed services, but Pinecone focuses more on simplicity, while Zilliz stays compatible with the open-source Milvus ecosystem.

Versus Weaviate, Zilliz focuses more on raw performance, cloud scalability, and multi-modal support, while Weaviate includes native GraphQL support and modular plugin-based features.

Compared to FAISS, Zilliz is a full database, not just a library. FAISS requires building your own infrastructure, while Zilliz offers a complete managed solution.

Milvus, which Zilliz is built on, is the open-source engine suitable for self-hosted deployments. Zilliz Cloud is the production-grade managed service built on top of Milvus with added security, scaling, and support features.

Zilliz: Customer Reviews and Testimonials

Zilliz has received positive reviews from developers and data teams working with LLMs, RAG pipelines, and AI infrastructure.

Early adopters appreciate the fully managed cloud experience, calling it a “huge time-saver” and praising its ease of setup. One developer commented, “Zilliz let us go from prototype to production in a week with zero backend hassle.”

Use cases highlighted by users include document search, chatbot development, e-commerce recommendations, and enterprise search systems.

The developer community praises Zilliz for its fast vector search performance, reliability at scale, and responsiveness to product feedback. Backed by the success of Milvus and support from a strong engineering team, Zilliz continues to grow its user base in both startups and enterprises.

Conclusion

Zilliz is a scalable vector database designed for AI applications. Built by the creators of Milvus and delivered as a fully managed cloud service, Zilliz enables developers to build high-performance semantic search, recommendation systems, and LLM-powered assistants without managing complex infrastructure.

With its robust feature set, including support for multi-modal data, real-time ANN search, and seamless LLM integration, Zilliz provides a strong foundation for AI-driven innovation. Whether you’re building a RAG pipeline, developing a chatbot, or enhancing product discovery, Zilliz offers the tools and scalability required to support production workloads.

For teams seeking a fast, reliable, and cloud-native vector database built for AI, Zilliz is a leading choice that combines an open-source foundation with the convenience of a fully managed service.