Hugging Face is a machine learning platform built around open-source tools that gives developers and researchers access to state-of-the-art AI models, datasets, and libraries. Best known for its Transformers library, Hugging Face has become a central hub for natural language processing (NLP), computer vision, and other machine learning applications.

The platform hosts thousands of pre-trained models and datasets, enabling users to build, share, and deploy AI applications quickly and efficiently. It supports a broad ecosystem of tools designed for training, fine-tuning, evaluating, and deploying machine learning models in both research and production environments.

Hugging Face has grown into a vibrant community that emphasizes transparency, collaboration, and democratization of AI, with contributions from academia, industry, and independent developers worldwide.

Features

Hugging Face offers a robust suite of tools and services designed to streamline machine learning development and deployment.

Transformers Library

A widely used Python library offering access to thousands of pre-trained models for NLP, vision, and audio tasks.

Model Hub

Central repository for hosting and accessing models, with versioning, tags, and usage statistics.

Datasets Hub

Curated and community-contributed datasets for text, image, speech, and multimodal learning tasks.

Inference API

Deploy models from the Model Hub via a scalable, cloud-hosted API without setting up infrastructure.
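As a rough sketch of what calling a hosted model can look like from Python, the snippet below uses the InferenceClient class from the huggingface_hub package. The HF_TOKEN environment variable and the helper names best_prediction and classify_remotely are assumptions made for illustration. Whether a given model is served, and under what limits, depends on the hosted service and your account.

```python
import os
from typing import Any, Sequence


def best_prediction(predictions: Sequence[Any]) -> Any:
    """Return the prediction with the highest score.

    Works on any objects that expose `.label` and `.score` attributes.
    """
    return max(predictions, key=lambda p: p.score)


def classify_remotely(text: str, model_id: str) -> Any:
    """Send `text` to a hosted text-classification model and return its top prediction."""
    # Imported here so the helper above can be used without the package installed.
    from huggingface_hub import InferenceClient

    # Assumption: an access token is stored in the HF_TOKEN environment variable.
    client = InferenceClient(token=os.environ.get("HF_TOKEN"))
    predictions = client.text_classification(text, model=model_id)
    return best_prediction(predictions)
```

Calling classify_remotely with a text and the ID of a text-classification model from the Model Hub issues a network request, so it needs connectivity and, for most accounts, a valid token.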

AutoTrain

No-code and low-code interface to train and fine-tune models on custom datasets.

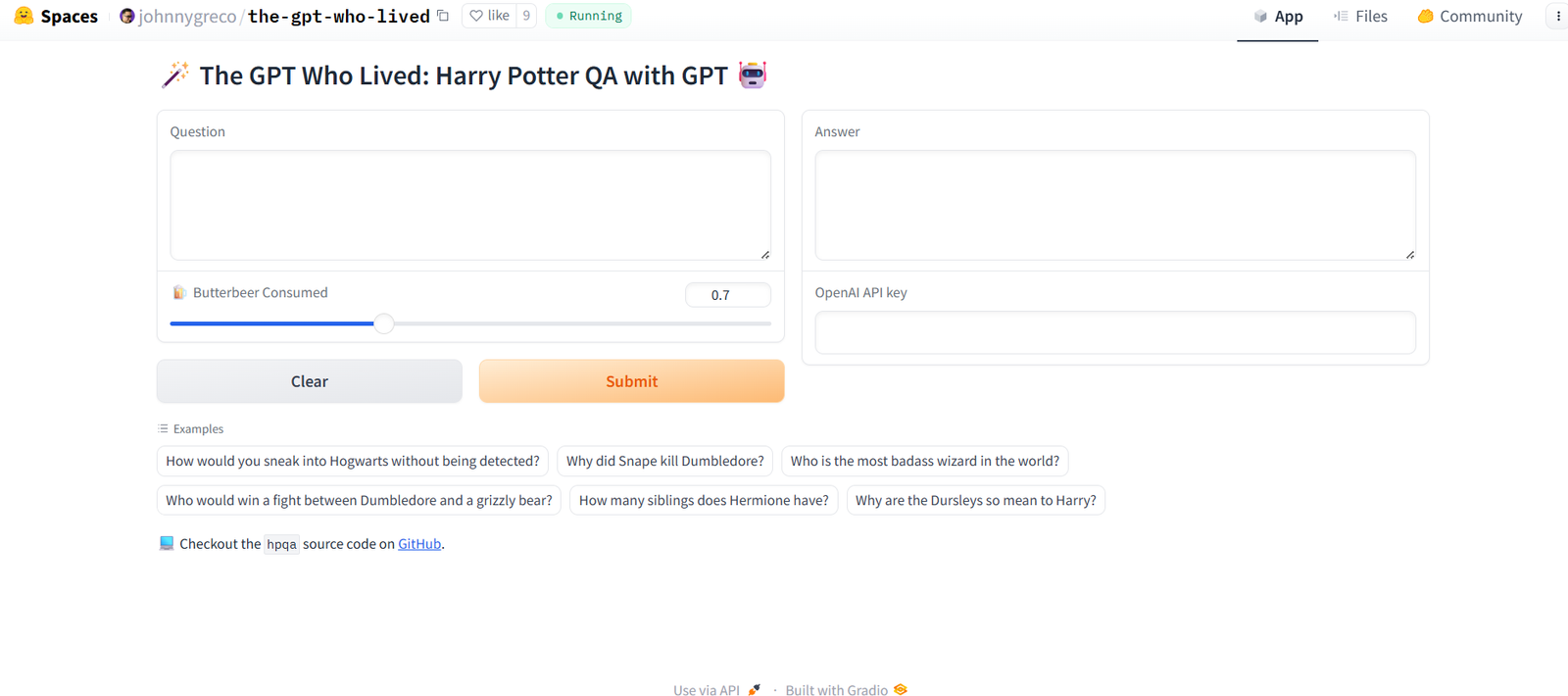

Spaces

A platform to build and share interactive ML demos using Streamlit, Gradio, or other web frameworks.
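To give a sense of how small a Gradio demo can be, here is a minimal sketch. The count_words function is a stand-in for a real model and build_demo is a hypothetical helper name; neither comes from Hugging Face.

```python
def count_words(text: str) -> int:
    """Stand-in for a model: count whitespace-separated words."""
    return len(text.split())


def build_demo():
    """Wrap `count_words` in a simple text-in, number-out Gradio interface."""
    # Imported lazily so `count_words` stays usable without Gradio installed.
    import gradio as gr

    return gr.Interface(fn=count_words, inputs="text", outputs="number")
```

Running build_demo().launch() starts a local web server with the demo. An app file hosted on Spaces would typically end with the same call.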

Tokenizers

Fast and efficient tokenization tools for text processing, designed for both research and production use.

Evaluation Tools

Metrics and benchmark frameworks to test and compare model performance across tasks.
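To make "comparing model performance" concrete, the sketch below computes accuracy for two sets of predictions in plain Python. It is an illustration only, not the implementation used by Hugging Face's evaluate library, and the labels and model names are made-up toy data.

```python
from typing import Sequence


def accuracy(predictions: Sequence[int], references: Sequence[int]) -> float:
    """Fraction of predictions that match the reference labels."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("at least one example is required")
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


# Toy data: reference labels and the outputs of two hypothetical models.
references = [1, 0, 1, 1, 0]
model_a = [1, 0, 1, 0, 0]  # 4 of 5 correct
model_b = [1, 1, 0, 0, 0]  # 2 of 5 correct

scores = {
    "model_a": accuracy(model_a, references),
    "model_b": accuracy(model_b, references),
}
best = max(scores, key=scores.get)
print(scores, "best:", best)
```

In practice, the evaluate library packages metrics like this one behind a common interface, so the same comparison can be run without hand-written metric code.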

Open Source Libraries

Includes transformers, datasets, tokenizers, accelerate, and diffusers, each focused on different stages of the ML lifecycle.

Community and Documentation

Comprehensive tutorials, active forums, and GitHub repositories support both beginners and experts.

How It Works

Hugging Face gives users access to model and dataset repositories that can be called through hosted APIs or downloaded and run locally. Users can browse models by task (e.g., sentiment analysis, translation, image classification), framework (e.g., PyTorch, TensorFlow), or modality (text, image, audio).
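Browsing by task and framework can also be done programmatically. The following is a minimal sketch that assumes the huggingface_hub package is installed; hub_tags and find_models are hypothetical helper names, and the tag strings are examples of the kind of labels shown on the Hub.

```python
from typing import List, Optional


def hub_tags(task: str, framework: Optional[str] = None) -> List[str]:
    """Build the list of Hub tags to filter on, e.g. a task plus a framework."""
    tags = [task]
    if framework:
        tags.append(framework)
    return tags


def find_models(task: str, framework: Optional[str] = None, limit: int = 5) -> List[str]:
    """Return up to `limit` model IDs from the Hub that carry all requested tags."""
    # Imported lazily so `hub_tags` can be used without the package installed.
    from huggingface_hub import HfApi

    api = HfApi()
    models = api.list_models(filter=hub_tags(task, framework), limit=limit)
    return [model.id for model in models]
```

For example, find_models("translation", "pytorch") would query the Hub over the network and return a handful of matching model IDs. The results change as the Hub changes.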

Developers can use the Transformers library to load and fine-tune models with just a few lines of code. For those without coding experience, Hugging Face’s AutoTrain allows training and deployment via an intuitive web interface. With Spaces, users can create and host interactive ML demos to showcase their models.
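As an illustration of the "few lines of code" claim, here is a minimal sketch that loads a sentiment model with the Transformers pipeline function and runs inference. It covers loading and prediction only, not fine-tuning. The helper names format_prediction and classify are hypothetical, and the first call downloads the model weights.

```python
from typing import Dict, List, Union


def format_prediction(prediction: Dict[str, Union[str, float]]) -> str:
    """Render one pipeline result, e.g. {'label': ..., 'score': ...}, as text."""
    return f"{prediction['label']} ({prediction['score']:.2f})"


def classify(texts: List[str], model_id: str) -> List[str]:
    """Run a sentiment-analysis pipeline over `texts` and format each result."""
    # Imported lazily so `format_prediction` works without Transformers installed.
    from transformers import pipeline

    classifier = pipeline("sentiment-analysis", model=model_id)
    return [format_prediction(p) for p in classifier(texts)]
```

A call such as classify(["Great library!"], "distilbert/distilbert-base-uncased-finetuned-sst-2-english") returns one formatted label per input text. The exact scores depend on the model and library version.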

For enterprise use, Hugging Face offers private model hosting, inference endpoints, and integrations with major cloud providers. Collaboration features such as team accounts and version control help teams share models and track changes.

Whether you are training a model from scratch, fine-tuning on a specific task, or simply running inference with pre-trained models, Hugging Face provides a unified platform to manage the complete ML pipeline.

Use Cases

Hugging Face supports a wide variety of use cases across industries and research domains.

Natural Language Processing

Deploy models for sentiment analysis, summarization, translation, and question answering.

Computer Vision

Use pre-trained models for image classification, object detection, and visual question answering.

Text Generation

Leverage large language models (LLMs) for chatbots, content creation, and code generation.

Conversational AI

Build intelligent virtual assistants using Transformers-based dialogue systems.

Speech and Audio

Run speech-to-text, voice recognition, and audio classification tasks with pre-trained models.

Multimodal Applications

Combine text, image, and audio inputs for complex use cases like captioning and classification.

Healthcare NLP

Apply biomedical-specific models for clinical document analysis and entity recognition.

Education and Research

Accelerate machine learning research with open-access models, datasets, and reproducible tools.

AI Prototyping

Use Spaces to rapidly build and test machine learning applications with interactive UIs.

Enterprise Deployment

Integrate Hugging Face models with private cloud infrastructure for secure, scalable inference.

Pricing

Hugging Face offers both free and paid plans depending on usage and enterprise requirements.

Free Tier

Access to public models, datasets, and Spaces. Includes limited usage of hosted inference APIs.

Pro Plan

Starting at $9/month, includes priority support, increased API limits, and private repositories.

Enterprise Plan

Custom pricing for advanced use cases, offering private model hosting, custom SLAs, team collaboration features, and deployment to private cloud or on-prem environments.

AutoTrain and Inference Endpoints

Billed based on usage, with pricing determined by the number of training hours, compute resources, and API calls.

Full pricing information is available on the Hugging Face Pricing Page.

Strengths

One of the largest and most active open-source repositories for pre-trained models and datasets.

Extensive support for NLP, vision, audio, and multimodal applications.

Accessible to both developers and non-technical users through intuitive tools.

Strong community contributions and partnerships with leading AI research labs.

Rapid deployment via hosted APIs and inference endpoints.

Cross-framework compatibility with PyTorch, TensorFlow, and JAX.

Frequent updates and support for widely used model families such as BERT, GPT-2, T5, and CLIP.

Well-documented libraries with beginner-friendly tutorials and examples.

Drawbacks

Hosted inference can become expensive at scale for high-volume applications.

Some models require significant computational resources to fine-tune or deploy locally.

AutoTrain is limited in customization compared to traditional ML pipelines.

Performance and latency depend on hosted infrastructure and compute quotas.

Requires technical understanding for advanced model development or optimization.

Public models may vary in quality and documentation, depending on the contributor.

Comparison with Other Tools

Hugging Face competes with platforms like OpenAI, Google Vertex AI, and AWS SageMaker but focuses heavily on open-source accessibility and community-driven development.

Unlike OpenAI, which primarily offers proprietary models via API, Hugging Face hosts openly available models such as BERT, DistilBERT, and Falcon, whose weights can be downloaded, inspected, and fine-tuned.

Compared to Google Vertex AI or AWS SageMaker, Hugging Face is more lightweight and flexible for researchers and startups who want faster experimentation without complex infrastructure.

In the academic and open-source AI community, Hugging Face is a preferred choice due to its transparency, modularity, and rich library ecosystem.

Customer Reviews and Testimonials

Hugging Face is widely praised across developer forums, GitHub, and AI conferences. It has become a foundational tool in the machine learning community: the Transformers repository alone has over 100,000 stars on GitHub, and the company has partnerships with Microsoft, AWS, Google, and Meta.

Developers appreciate the ease of use:

“Hugging Face lets me go from idea to prototype in minutes.”

“I use Transformers in all my NLP projects. The documentation and model hub are outstanding.”

Enterprises and research labs trust Hugging Face for mission-critical AI workflows, often highlighting its openness, support for reproducibility, and rapid community-driven innovation.

Conclusion

Hugging Face has established itself as the go-to platform for accessible, collaborative, and open-source AI development. Whether you’re a solo developer building chatbots or an enterprise deploying large-scale models, Hugging Face offers the tools, models, and infrastructure needed to build intelligent applications efficiently.

With a commitment to openness and usability, Hugging Face continues to democratize machine learning and accelerate innovation across industries and domains.