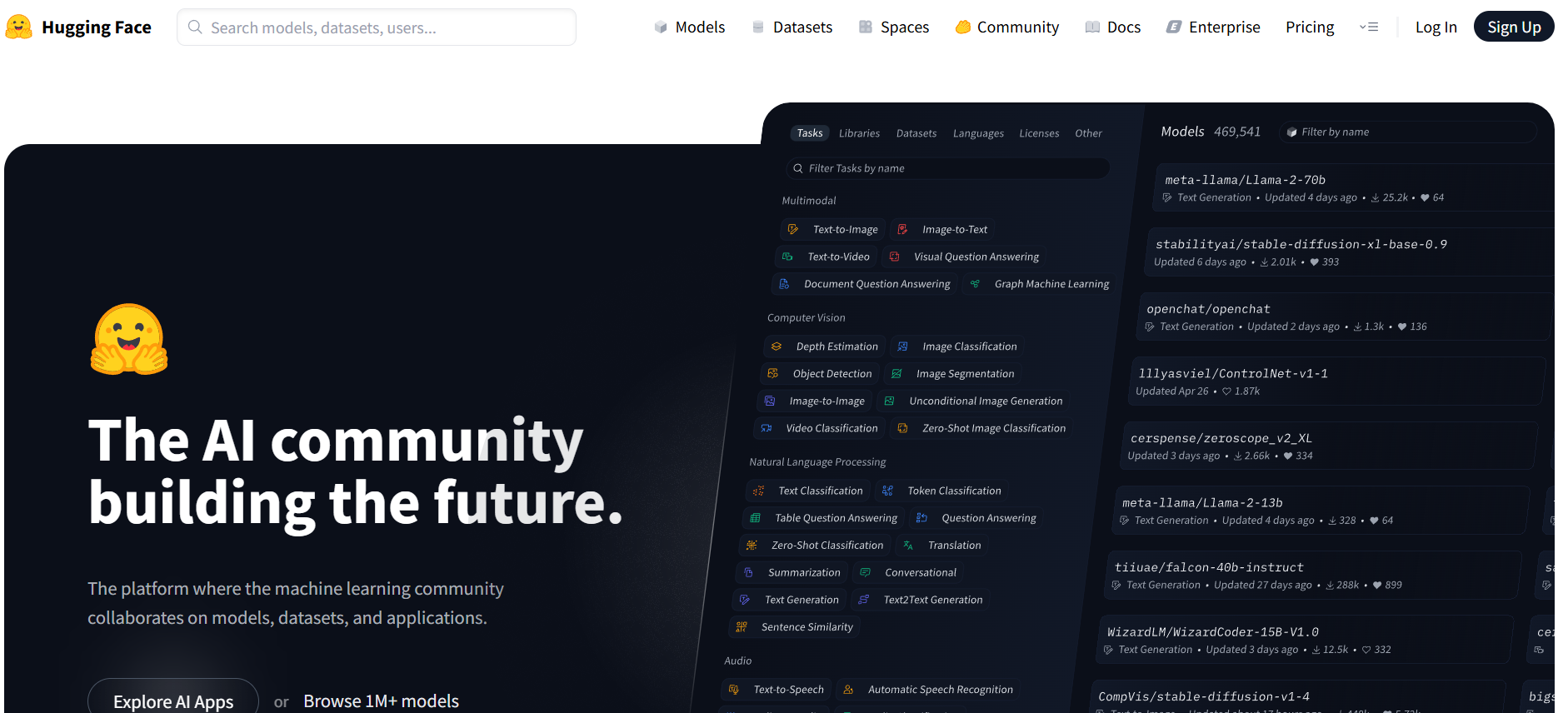

Hugging Face is a leading open-source platform that provides tools, models, and datasets for building and deploying machine learning applications. Originally focused on natural language processing (NLP), Hugging Face has evolved into a central hub for the AI community, supporting tasks across NLP, computer vision, speech processing, and reinforcement learning.

At the core of Hugging Face is the Transformers library, a widely used Python package that lets developers download, run, and fine-tune pre-trained models from leading research institutions. The company also offers Datasets, Tokenizers, Accelerate, Diffusers, and other libraries that simplify model training and deployment. Beyond software, Hugging Face hosts a collaborative Model Hub, where users can share, discover, and use over 500,000 pre-trained models.

Whether you’re a data scientist, ML engineer, researcher, or developer, Hugging Face provides the infrastructure and resources needed to experiment, fine-tune, and scale AI solutions in both academic and enterprise environments.

Features

Hugging Face offers a wide suite of features tailored to modern machine learning workflows.

Model Hub: A central repository with over 500,000 models for tasks like text classification, translation, summarization, image generation, audio transcription, and more.

Transformers Library: Access to pre-trained transformer-based models including BERT, GPT-2, T5, RoBERTa, and CLIP, with simple APIs for inference and fine-tuning.

Datasets Library: A library for loading, processing, and sharing datasets. It gives access to thousands of ready-to-use datasets for NLP, computer vision, and audio tasks, and it interoperates with PyTorch, TensorFlow, and JAX.

Inference API: Run predictions against hosted models through cloud APIs without managing infrastructure. Dedicated deployments are available through Inference Endpoints.

Spaces: Create and share interactive ML applications and demos built with Gradio or Streamlit, directly within the Hugging Face platform.

AutoTrain: A low-code tool that automates model training, evaluation, and deployment using minimal user input.

Accelerate Library: A framework that simplifies multi-GPU and distributed training for large-scale model development.

Tokenizers Library: Efficient, customizable tokenizers built for speed and scalability in NLP pipelines.

Diffusers Library: Tools for working with diffusion models such as Stable Diffusion, enabling high-quality image generation.

Hub for Research and Open Collaboration: Researchers and institutions share cutting-edge models, contributing to the global open-source AI movement.
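Calling a hosted model through the Inference API comes down to an authenticated HTTP POST. The sketch below only prepares such a request, using Python's standard library. It makes three assumptions:

- The endpoint is the historically documented serverless one, `https://api-inference.huggingface.co/models/<model_id>`.
- Authentication uses a bearer access token.
- The body is JSON with an `inputs` field.

Hugging Face has since moved serverless inference behind its Inference Providers router, so check the base URL against the current documentation. The function name `build_inference_request`, the model ID `my-org/my-sentiment-model`, and the token are hypothetical placeholders.

```python
import json
import urllib.request

# Assumption: historically documented serverless endpoint. Check current docs,
# since Hugging Face now routes serverless inference through Inference Providers.
INFERENCE_BASE_URL = "https://api-inference.huggingface.co/models"


def build_inference_request(
    model_id: str,
    inputs: str,
    token: str,
    base_url: str = INFERENCE_BASE_URL,
) -> urllib.request.Request:
    """Prepare, but do not send, a POST request asking a hosted model for a prediction."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/{model_id}",
        data=body,
        headers={
            # A user access token, passed as a bearer token
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical model ID and token, for illustration only
    req = build_inference_request("my-org/my-sentiment-model", "I love this library!", "hf_xxx")
    print(req.get_method(), req.full_url)
    # Sending it requires network access and a valid token:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.loads(resp.read()))
```

In practice, the official `huggingface_hub` package provides an `InferenceClient` class that handles endpoints and authentication. That class is usually preferable to hand-built requests like this one.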

How It Works

Hugging Face works by providing a unified platform that connects developers with pre-trained models, training tools, datasets, and deployment resources. Users can browse the Model Hub to find state-of-the-art models categorized by task, language, and framework. Once selected, these models can be integrated into applications using Python libraries such as Transformers or deployed using the Inference API.
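Under the hood, loading a model from the Hub means downloading files such as its configuration and weights from a Git-based repository over HTTPS. The sketch below uses only the standard library to reproduce the URL pattern built by the `hf_hub_url` helper in the official `huggingface_hub` client:

- Model repositories: `https://huggingface.co/{repo_id}/resolve/{revision}/{filename}`.
- Dataset and Space repositories: the same pattern with a `datasets/` or `spaces/` prefix before the repository ID.

The function name `hub_file_url` and the repository IDs are illustrative assumptions. Real code would normally call `hf_hub_download`, or let Transformers' `from_pretrained` fetch the files, rather than build URLs by hand.

```python
from urllib.parse import quote

# Assumption: the public Hub endpoint; private deployments or mirrors may differ.
HUB_ENDPOINT = "https://huggingface.co"

# Model repos have no prefix; dataset and Space repos live under their own path.
REPO_TYPE_PREFIXES = {"model": "", "dataset": "datasets/", "space": "spaces/"}


def hub_file_url(
    repo_id: str,
    filename: str,
    revision: str = "main",
    repo_type: str = "model",
) -> str:
    """Build the direct download URL for one file in a Hub repository."""
    if repo_type not in REPO_TYPE_PREFIXES:
        raise ValueError(f"unknown repo_type: {repo_type!r}")
    # Revisions such as "refs/pr/1" are fully escaped; filenames keep "/"
    # so that files in subfolders resolve correctly.
    return (
        f"{HUB_ENDPOINT}/{REPO_TYPE_PREFIXES[repo_type]}{repo_id}"
        f"/resolve/{quote(revision, safe='')}/{quote(filename)}"
    )


if __name__ == "__main__":
    # Hypothetical repository IDs, for illustration only
    print(hub_file_url("my-org/my-model", "config.json"))
    print(hub_file_url("my-org/my-data", "train/part 1.csv", repo_type="dataset"))
```

Pinning `revision` to a specific commit or tag, rather than `main`, is one way to keep an application reproducible when a model's authors push updates.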

Developers can also upload their own models to the hub, making them public or private. Training custom models is simplified through Hugging Face’s AutoTrain and Accelerate tools, which abstract away much of the setup required for GPU and cloud-based training.

For real-time demos or UI-based deployments, users can create Spaces: web-based apps built with open-source frameworks such as Gradio or Streamlit. This makes it easy to showcase AI capabilities without building front-end infrastructure.

The libraries are open source on GitHub, with active community support and thorough documentation to help users onboard quickly.

Use Cases

NLP Applications: Build chatbots, sentiment analyzers, summarizers, and language translators using pre-trained language models.

Text Generation: Implement AI writing assistants, code generators, or creative writing tools powered by transformer models like GPT.

Computer Vision: Develop object detection, image classification, and generative art applications using models like CLIP or Stable Diffusion.

Speech Processing: Create transcription, voice recognition, and text-to-speech tools using automatic speech recognition (ASR) models such as Whisper alongside text-to-speech (TTS) models.

Academic Research: Reproduce papers, benchmark models, and contribute to the open science community with reproducible results and shared resources.

Enterprise AI Development: Accelerate production AI workflows with hosted models, secure API endpoints, and scalable infrastructure.

Education and Training: Teach ML concepts and run hands-on demos through Hugging Face Spaces in classroom or workshop settings.

Pricing

Hugging Face offers both free and paid plans depending on user needs:

Free Tier:

Access to open-source libraries and community-shared models

Free use of Hugging Face Spaces (with limited resources)

Community support via GitHub and forums

Pro Plan – $9/month:

Increased limits on Inference API usage

Priority support

More compute resources for Spaces

Lab/Team/Enterprise Plans – Custom Pricing:

Designed for teams, research labs, and enterprises

Includes private model hosting, collaboration features, usage analytics, and SLA-backed support

Access to GPU-powered Spaces and training environments

Integration with private repositories and secure APIs

Current pricing details are available at huggingface.co/pricing.

Strengths

Open-Source Leadership: Hugging Face is a pioneer in democratizing AI research and tools through open collaboration and transparency.

Extensive Model Hub: Hundreds of thousands of pre-trained models for NLP, vision, audio, and more, with regular community contributions.

Powerful Ecosystem: Includes tools for training, deployment, tokenization, inference, and dataset management.

Low Barrier to Entry: Clean APIs and automation tools allow even non-experts to use state-of-the-art models.

Scalable Deployment: Hosted inference APIs and Spaces enable real-world applications without heavy infrastructure setup.

Active Community: Contributions from top researchers, developers, and institutions keep the ecosystem current, and popular models and libraries are actively maintained.

Educational Value: Widely used in academic courses, bootcamps, and online tutorials due to its ease of use and documentation.

Drawbacks

Resource Limits on Free Tier: Users relying on free APIs or Spaces may find limitations on usage and compute power.

Requires Some Technical Knowledge: While beginner-friendly, full utilization of training tools and distributed computing requires intermediate Python and ML skills.

Enterprise Features Are Paid: High-performance hosting, custom support, and team administration features are only available in paid tiers.

Limited Model Curation: Because anyone can publish to the Hub, model quality and documentation vary depending on the contributor.

Comparison with Other Tools

Compared to OpenAI:

OpenAI offers powerful APIs for text and image generation, but its flagship models are proprietary and available only through its API. Hugging Face provides more transparency, open-source tools, and flexibility for custom training.

Compared to TensorFlow Hub:

TensorFlow Hub is focused on TensorFlow-compatible models, while Hugging Face supports multiple backends (PyTorch, TensorFlow, JAX) and offers a more diverse model catalog.

Compared to Cohere or Anthropic:

These platforms offer proprietary large language models via API, whereas Hugging Face lets you train, fine-tune, and host your own models with full control.

Compared to GitHub Copilot:

Copilot is focused on code generation via a proprietary model. Hugging Face provides models for coding tasks, but also supports far broader AI applications across domains.

Customer Reviews and Testimonials

Hugging Face is widely praised by developers, researchers, and data scientists around the world. Its Transformers repository is one of the most-starred machine learning projects on GitHub, and the platform has a strong presence in tech forums and AI communities.

A machine learning engineer shared:

“Hugging Face has completely changed how we build NLP products. From transformers to datasets, everything we need is in one place.”

An academic researcher noted:

“We used Hugging Face to replicate state-of-the-art results for our NLP paper and share our model with the community. It’s the gold standard for reproducible ML.”

A startup founder commented:

“With Hugging Face’s APIs and Spaces, we were able to launch an AI-powered demo in under a day, saving weeks of development time.”

The consistent feedback emphasizes Hugging Face’s utility, reliability, and positive impact on accelerating machine learning innovation.

Conclusion

Hugging Face is more than just a model hub—it’s a complete ecosystem for modern AI development. With its open-source philosophy, powerful libraries, and collaborative platform, Hugging Face makes state-of-the-art machine learning accessible to developers, researchers, and organizations of all sizes.

Whether you’re prototyping an AI application, fine-tuning a transformer model, or deploying an interactive demo, Hugging Face provides the tools and community to bring your ideas to life. Its combination of flexibility, scalability, and openness makes it a cornerstone in the evolving world of artificial intelligence.